Let us discuss what CCNP is in detail. In today’s digital age, robust IT networking has never been more crucial. As businesses increasingly depend on technology for operations and communication, a seamless and effective network infrastructure is vital for connectivity, security, and scalability. The dense interconnection of devices calls for skilled professionals who can keep these networks optimized and secure.

In this landscape, the Cisco Certified Network Professional (CCNP) certification emerges as a significant player. CCNP furnishes individuals with a holistic skill set, delving into advanced networking concepts and technologies. This certification stands as a linchpin for professionals aspiring to excel in roles spanning from network engineering to cybersecurity. For an in-depth understanding of CCNP and its pivotal role in the ever-evolving realm of networking, read the blog to its conclusion. Uncover the nuanced details that position CCNP as a cornerstone in the journey toward a prosperous career in IT networking.

What is CCNP (Cisco Certified Network Professional)?

CCNP, or Cisco Certified Network Professional, is Cisco’s professional-level networking certification and is recognized globally across information technology and networking. Developed by Cisco Systems, it validates the advanced competencies of networking professionals who plan, implement, verify, and troubleshoot complex network solutions.

Positioned as a progression beyond the CCNA (Cisco Certified Network Associate), the CCNP certification goes deeper into networking concepts and technologies. It caters to individuals who already have a solid understanding of networking fundamentals and want to extend their expertise, particularly in areas like routing and switching, security, wireless networking, and collaboration.

A noteworthy aspect of CCNP is its modular structure, which lets candidates specialize in a track that matches their career aspirations. The tracks include CCNP Enterprise, CCNP Security, CCNP Data Center, CCNP Service Provider, and CCNP Collaboration.

Attaining CCNP certification requires candidates to pass two exams in their chosen track: a core exam and a concentration exam. Cisco regularly updates its certification programs to stay aligned with industry trends and emerging technologies, ensuring that CCNP-certified professionals are well-prepared to meet the evolving demands of the networking landscape.

Earning CCNP certification can unlock a plethora of career opportunities, as it is widely recognized by employers globally. It serves as a testament to a candidate’s dedication to ongoing learning and a high level of proficiency in designing, implementing, and managing complex network solutions across various domains. In the dynamic IT industry, CCNP remains a valuable credential for networking professionals striving to stay at the forefront of their careers.

What is the importance of the Cisco CCNP certification?

The importance of the Cisco CCNP certification is as follows-

- Validation of Advanced Skills:

Attaining the CCNP certification signifies that an individual possesses advanced skills and in-depth knowledge in networking. It goes beyond the foundational aspects covered in the CCNA certification, showcasing a heightened proficiency in planning, implementing, managing, and troubleshooting intricate network solutions.

- Global Recognition:

Cisco’s standing as a leading global provider of networking technologies lends widespread recognition and respect to the CCNP certification. It stands as a valuable credential sought by employers in the industry, ensuring that teams are equipped with the requisite skills to navigate sophisticated network environments.

- Career Advancement:

CCNP certification substantially broadens career horizons, unlocking access to higher-level positions in networking and IT. Roles such as network engineer, network architect, systems engineer, or IT manager become more attainable, with many organizations giving preference to candidates holding CCNP certification for roles involving the design and management of complex network infrastructures.

- Specialization in Various Tracks:

CCNP’s diverse tracks enable individuals to specialize in areas like enterprise networking, security, data center technologies, service provider networks, and collaboration. This specialization empowers professionals to align their skills with specific industry demands and personal career objectives.

- Adaptation to Emerging Technologies:

Cisco’s regular updates to certification programs ensure that CCNP-certified professionals stay abreast of the latest technologies and industry trends. This adaptability equips them with knowledge in emerging areas such as Software-Defined Networking (SDN), network automation, and other advancements, ensuring their relevance in the rapidly evolving IT landscape.

- Demonstration of Commitment:

Achieving CCNP certification is a testament to an individual’s dedication, involving rigorous study and successful completion of challenging exams. Employers view this commitment positively, recognizing a professional’s dedication to continuous learning and staying current with advancements in networking technology.

- Increased Confidence and Credibility:

CCNP certification fosters heightened confidence both in professionals and employers. It serves as a tangible acknowledgment of an individual’s capability to handle complex networking tasks, contributing to their overall credibility within the industry.

- Networking Community Involvement:

CCNP certification provides access to a community of similarly accomplished professionals. Networking events, forums, and resources offered by Cisco and the broader community facilitate collaboration, knowledge sharing, and staying informed about industry developments.

What is the difference between CCNA and CCNP?

The difference between CCNA and CCNP is as follows-

- Foundational Knowledge

CCNA, or Cisco Certified Network Associate, is an entry-level certification that provides a solid foundation in networking concepts.

CCNP, or Cisco Certified Network Professional, is a professional-level certification that takes a more advanced approach, delving into intricate networking technologies and solutions.

- Experience Level

CCNA is tailored for individuals entering the networking field with limited experience.

CCNP is designed for seasoned networking professionals, demanding a higher level of expertise and hands-on experience.

- Exams and Prerequisites

CCNA typically requires passing a single exam.

CCNP requires two exams: a core exam and a concentration exam, each focusing on advanced topics within the chosen specialization.

- Specialized Tracks

CCNA is a general certification providing a broad overview of networking.

CCNP offers specialized tracks such as enterprise, security, data center, service provider, and collaboration, allowing professionals to specialize based on their interests.

- Job Roles

CCNA qualifies individuals for roles like network administrator, support engineer, or entry-level positions.

CCNP is suited for senior roles, including network engineer, systems engineer, or network architect, requiring a deeper level of expertise.

- Depth of Topics

CCNA covers fundamental topics like routing, switching, and basic security.

CCNP delves deeper into these subjects and introduces advanced concepts like VPNs, automation, and troubleshooting.

- Hands-On Proficiency

CCNA emphasizes practical skills for configuring and managing network devices.

CCNP expects a higher level of proficiency in hands-on troubleshooting, network design, and complex configurations.

- Networking Technologies

CCNA provides an overview of various networking technologies.

CCNP delves into specific technologies relevant to the chosen specialization, offering a more in-depth understanding.

- Certification Progression

CCNA is no longer a formal prerequisite for CCNP, but it is the usual starting point, creating a natural progression for individuals looking to advance their networking careers.

- Employer Expectations

CCNA is sought for entry-level positions, showcasing foundational skills.

CCNP is preferred for mid-to-senior level roles, indicating a higher level of expertise and experience.

- Salary Considerations

CCNA holders may receive lower salaries compared to CCNP-certified professionals due to differences in experience and proficiency.

- Network Design Proficiency

CCNA introduces basic principles of network design.

CCNP involves a more comprehensive understanding and application of network design principles.

- Troubleshooting Expertise

CCNA emphasizes basic troubleshooting skills.

CCNP requires advanced troubleshooting capabilities, including addressing complex network issues.

- Time and Effort Investment

CCNA is generally quicker to attain, focusing on foundational concepts.

CCNP demands more time and effort due to its advanced nature and the requirement for multiple exams.

- Recertification Requirements

Both CCNA and CCNP certifications are valid for three years and must then be renewed; the recertification options differ between the two levels.

- Automation and SDN Focus

CCNA introduces basic concepts of automation and Software-Defined Networking (SDN).

CCNP delves deeper into these topics, emphasizing practical implementation.

- Job Market Demand

CCNA certification meets the demand for entry-level roles.

CCNP certification is often sought for positions requiring advanced networking skills, aligning with the demand for experienced professionals.

- Networking Community Engagement

CCNA holders are part of the broader networking community.

CCNP certification often leads to involvement in a more specialized and advanced professional network.

- Role in IT Projects

CCNA professionals may contribute to the execution of networking projects.

CCNP-certified individuals often play a key role in planning, designing, and managing complex IT projects.

- Networking Equipment Configuration

CCNA training focuses on configuring basic networking devices.

CCNP training demands proficiency in configuring and managing a broader range of complex networking equipment.

What are the CCNP certification tracks?

Cisco has established several CCNP certification tracks, each concentrating on specific facets of networking. Cisco revises this lineup from time to time, so check the official certification pages for the current list. The primary CCNP tracks include:

CCNP Enterprise Certification

Tailored for professionals engaged in enterprise networking solutions, this track explores advanced subjects in routing, switching, and troubleshooting. Key technologies encompass advanced routing protocols, Software-Defined Networking (SDN), and network automation.

CCNP Security Certification

Geared towards individuals specializing in network security, this track addresses topics such as the implementation and management of security solutions, Virtual Private Networks (VPNs), identity management, and secure access.

CCNP Data Center Certification

Customized for professionals immersed in data center technologies, this track encompasses subjects like data center infrastructure, automation, storage networking, and unified computing.

CCNP Service Provider Certification

Directed at individuals dealing with service provider networks, this track delves into advanced topics related to service provider infrastructure, services, and edge networking.

CCNP Collaboration Certification

Crafted for professionals immersed in collaboration technologies, this track encompasses voice, video, and messaging applications. Topics span collaboration infrastructure, messaging, and call control.

CCNP DevNet Certification

Centered on network automation and programmability, this track caters to professionals with an interest in software development, automation, and programmability within the networking context.

How to choose the right CCNP career path?

Selecting the appropriate CCNP (Cisco Certified Network Professional) career path involves a thoughtful evaluation of your interests, skills, and professional aspirations within the context of the available CCNP tracks. Here are the steps to guide you in making a well-informed decision:

- Reflect on Your Interests

Consider your areas of interest within the broad field of networking. Whether it is security, collaboration technologies, data center solutions, or service provider networks, identifying your passions will help narrow down the CCNP track that suits you best.

- Assess Your Skills and Experience

Evaluate your current skills and experience in the realm of networking. If you already possess a solid foundation in a specific area, such as security or data center technologies, it might make sense to opt for a CCNP track aligned with your existing expertise.

- Define Your Career Goals

Clearly outline your long-term career goals. If you have a specific role in mind, such as a network engineer, security specialist, or data center architect, choosing a CCNP track that aligns with the requisite skills for that role is advantageous.

- Explore CCNP Tracks

Dive into the details of each CCNP track. Familiarize yourself with the topics covered, the technologies involved, and the skills emphasized in each specialization. Cisco’s official website provides comprehensive information on each CCNP track.

- Consider Industry Demand

Research the current and anticipated demand for skills in different CCNP tracks. Prioritize areas where there is a growing need for expertise to enhance your job prospects in the evolving landscape.

- Check Certification Prerequisites

Verify the current requirements for the CCNP tracks you are interested in. Cisco does not set formal prerequisites for CCNP exams, but CCNA-level knowledge and a few years of hands-on experience are recommended, so make sure your foundation is solid.

- Engage with the Networking Community

Connect with the networking community and make use of available resources. Attend industry events, participate in forums, and seek advice from professionals who have pursued specific CCNP tracks. Their experiences can offer valuable insights.

- Consider Emerging Technologies

Evaluate the relevance of emerging technologies within each CCNP track. Take into account areas like automation, software-defined networking (SDN), and cloud integration, as these are increasingly crucial in the networking field.

- Explore Training and Study Materials

Investigate the availability of training resources and study materials for each CCNP track. Opt for learning resources that align with your preferred learning style and availability.

- Develop a Study Plan

Create a study plan that suits your schedule and learning preferences. Break down the certification requirements into manageable milestones, and allocate time for study, practice, and exam preparation.

- Seek Mentorship

If possible, seek guidance from mentors or professionals who have pursued CCNP certifications. Their advice and experiences can provide valuable perspectives on the challenges and rewards associated with different CCNP tracks.

- Remain Flexible

Keep in mind that the technology landscape evolves. While it is crucial to choose a CCNP track aligned with your current goals, staying flexible allows you to adapt to emerging trends and technologies.

What is the CCNP exam structure?

The structure of the CCNP (Cisco Certified Network Professional) certification exams comprises several key components. It is essential to note that Cisco can introduce changes or updates to the exam structure at any time. Here is a detailed breakdown of the CCNP exam structure:

- CCNP Core Exam

- Every CCNP track requires candidates to pass a core exam specific to that track, which serves as the foundational element of the certification.

- The core exam assesses both fundamental and advanced topics for the track. In the enterprise track, for example, it covers areas like routing, switching, security, automation, and network design.

- Its purpose is to validate candidates’ overall proficiency in the core skills of the chosen track.

- Specialization Exams

- Candidates choose a specific CCNP track based on their preferences and career objectives, and then pick a concentration exam within that track in addition to the core exam.

- Each CCNP specialization has its set of concentration exams, delving deeper into the specific technologies and concepts associated with that particular track. Concentration exams enable candidates to showcase expertise in a specific domain, such as enterprise networking, security, data center technologies, service provider networks, collaboration, or DevNet (network automation and programmability).

- Concentration Exams

- Concentration exams within a CCNP track cover advanced topics relevant to that specialization.

- Candidates must pass one concentration exam of their choice, in addition to the core exam, to attain CCNP certification in their chosen track.

- These exams allow individuals to demonstrate in-depth knowledge and skills in a specific area within the broader CCNP track.

- Exam Format

- CCNP exams typically include a diverse range of question types, such as multiple-choice questions (MCQs), drag-and-drop questions, simulations, and scenario-based questions.

- The exams aim to assess both theoretical understanding and practical skills, requiring candidates to apply networking concepts in real-world scenarios.

- Duration and Passing Score

- The duration of CCNP exams varies, with candidates allotted a specific amount of time for each exam.

- To pass, candidates must achieve a minimum passing score, the specific value of which may differ for various exams.

- Exam Delivery

- Pearson VUE, a global testing provider partnered with Cisco, typically administers CCNP exams.

- Candidates can take the exams at Pearson VUE testing centers or opt for online proctoring, allowing them to take the exam remotely.

- Recertification Requirements

CCNP certifications remain valid for three years. To maintain certification status, individuals must recertify before the expiry date, for example by passing any one professional-level core exam, by passing any two concentration exams, by passing a CCIE (Cisco Certified Internetwork Expert) lab exam, or by earning Continuing Education credits through Cisco’s program.

- Study Material

You can prepare for the Cisco CCNP exam through online training from platforms available throughout the world. One such platform is Network Kings, where you can prepare for the CCNP certification and learn directly from engineers with real-world experience.
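The core-plus-concentration rule and the three-year validity period described above can be sketched in a few lines of Python. This is only an illustration: the `Track` class, the function names, and the short list of exam codes are assumptions made for the example, and the exam list is a partial, illustrative subset that Cisco may change at any time.

```python
from dataclasses import dataclass
from datetime import date

VALIDITY_YEARS = 3  # validity period stated in the article


@dataclass(frozen=True)
class Track:
    """A CCNP track: one core exam plus a pool of concentration exams."""
    name: str
    core_exam: str
    concentration_exams: frozenset


# Illustrative subset only; check Cisco's site for the current exam list.
ENTERPRISE = Track(
    name="CCNP Enterprise",
    core_exam="350-401 ENCOR",
    concentration_exams=frozenset({"300-410 ENARSI", "300-420 ENSLD"}),
)


def earns_certification(track: Track, passed: set) -> bool:
    """True when the core exam and at least one concentration exam are passed."""
    has_core = track.core_exam in passed
    has_concentration = bool(track.concentration_exams & passed)
    return has_core and has_concentration


def expiry_date(certified_on: date) -> date:
    """Date on which the certification lapses unless the holder recertifies."""
    target_year = certified_on.year + VALIDITY_YEARS
    try:
        return certified_on.replace(year=target_year)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; use 28 February.
        return certified_on.replace(year=target_year, day=28)


if __name__ == "__main__":
    passed = {"350-401 ENCOR", "300-410 ENARSI"}
    print(earns_certification(ENTERPRISE, passed))
    print(expiry_date(date(2025, 6, 1)))
```

Modelling the track as data keeps the rule in one place, so adding another track only means declaring another `Track` value.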

What are the prerequisites for CCNP?

Cisco does not set formal prerequisites for the Cisco Certified Network Professional (CCNP) exams, but the following are strongly recommended before you attempt them-

- CCNA-Level Knowledge

A valid Cisco Certified Network Associate (CCNA) certification is not mandatory, but CCNA-level knowledge is strongly recommended, as CCNP builds on the foundational knowledge provided by CCNA.

- Experience

It is recommended to have hands-on experience with Cisco networking products and technologies. The required level of experience may vary depending on the specific CCNP track.

- Understanding of Exam Topics

Familiarize yourself with the exam topics outlined in the official blueprints for the specific CCNP track you are interested in.

- Networking Knowledge

A strong understanding of networking concepts, protocols, and technologies is crucial. This includes knowledge of routing, switching, security, and related areas.

What are the benefits of the CCNP certification?

The benefits of earning the CCNP certification are as follows-

- Extensive Networking Knowledge

CCNP signifies a deep understanding of networking, covering advanced concepts in routing, switching, and security. It offers a thorough grasp of Cisco technologies.

- Career Progression

Globally recognized, CCNP enhances professional credibility and opens doors to career advancement opportunities.

- Specialization Options

CCNP provides various tracks, like Enterprise, Security, and Data Center. This allows individuals to specialize in areas that align with their career objectives.

- Enhanced Employability

Many employers highly value Cisco certifications, with CCNP often being a requirement or a strong preference for networking roles. Holding a CCNP certification makes individuals more appealing to potential employers.

- Salary Boost

CCNP-certified professionals typically command higher salaries due to their demonstrated expertise, setting them apart in the job market.

- Networking Community Involvement

CCNP certification grants access to a community of certified professionals, fostering knowledge sharing, collaboration, and staying updated on industry trends.

- Skills Validation

CCNP serves as a tangible validation of skills and knowledge in Cisco networking technologies, assuring employers and peers.

- Keeping Abreast of Technology

Cisco regularly updates its certifications to align with the latest technologies and best practices. Maintaining CCNP certification ensures professionals stay current and relevant in the field.

- Access to Cisco Resources

CCNP certification offers access to official Cisco resources, including documentation, training materials, and support. This access helps certified professionals stay informed about the latest developments in Cisco technologies.

- Personal and Professional Growth

Pursuing and obtaining CCNP certification demands dedication and effort. The process contributes to personal and professional growth, enhancing problem-solving skills and technical capabilities.

What are the preparation tips for the CCNP exam?

The preparation tips for the CCNP exam are as follows-

Developing a Study Plan

Start by checking out what topics are on the CCNP exam. Craft a study plan that covers all these areas. Break down your study sessions into bite-sized pieces, focusing on one topic at a time. Allocate specific time slots each day or week, taking into account your work and personal commitments. A well-thought-out study plan helps keep you organized and ensures you cover everything you need for the exam.
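As a rough illustration of such a plan, the sketch below splits a total study budget across topics in proportion to their weight and estimates how many weeks that takes. The topic names, the weights, and the function names are hypothetical placeholders; substitute the weights published in the official blueprint for your exam.

```python
from math import ceil

# Hypothetical topic weights in percent; replace them with the values
# published in the official exam blueprint for your track.
TOPIC_WEIGHTS = {
    "Routing": 30,
    "Switching": 25,
    "Security": 20,
    "Automation": 15,
    "Wireless": 10,
}


def allocate_hours(weights: dict, total_hours: float) -> dict:
    """Split total_hours across topics in proportion to their weights."""
    total_weight = sum(weights.values())
    return {
        topic: round(total_hours * weight / total_weight, 1)
        for topic, weight in weights.items()
    }


def weeks_needed(total_hours: float, hours_per_week: float) -> int:
    """Number of whole weeks required at a fixed weekly study budget."""
    return ceil(total_hours / hours_per_week)


if __name__ == "__main__":
    plan = allocate_hours(TOPIC_WEIGHTS, total_hours=120)
    for topic, hours in plan.items():
        print(f"{topic:<12}{hours:>6.1f} h")
    print("Weeks at 8 h/week:", weeks_needed(120, 8))
```

Weighting the hours by blueprint share is one reasonable choice; you may prefer to shift extra time toward the topics you find hardest.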

Utilizing Online Study Materials

Online training platforms offer study materials tailored to each CCNP exam. These materials are designed to cover the crucial concepts. They are crafted by experts and give you a deep dive into the technologies and topics you will face in the exam.

Hands-on Practice and Lab Exercises

Get practical experience—it is key to CCNP exam success. Set up a lab environment using Cisco devices and simulators like Packet Tracer or GNS3. Hands-on practice reinforces what you have learned theoretically and lets you troubleshoot and configure network scenarios. Play around with different configurations, implement solutions, and simulate real-world scenarios to amp up your skills. Lab exercises are a must for tackling the practical aspects of CCNP exams.

Joining Online Training at Network Kings

Consider joining an online platform like Network Kings. Here is how to make the most of it:

- Structured Curriculum: Network Kings likely follows a curriculum aligned with CCNP exam objectives. Stick to the course structure to make sure you cover all the necessary topics.

- Live Sessions and Recordings: Jump into live training sessions for interactive learning. Missed one? No worries—catch up with recorded sessions at your own pace.

- Expert Instructors: Network Kings probably has seasoned instructors with loads of insights, tips, and real-world examples.

What skills will you learn with the CCNP Cisco certification?

The skills you will learn with the CCNP certification are as follows-

- Mastery of Advanced Routing and Switching:

Acquiring in-depth knowledge of advanced routing and switching concepts, including dynamic routing protocols and advanced switching techniques.

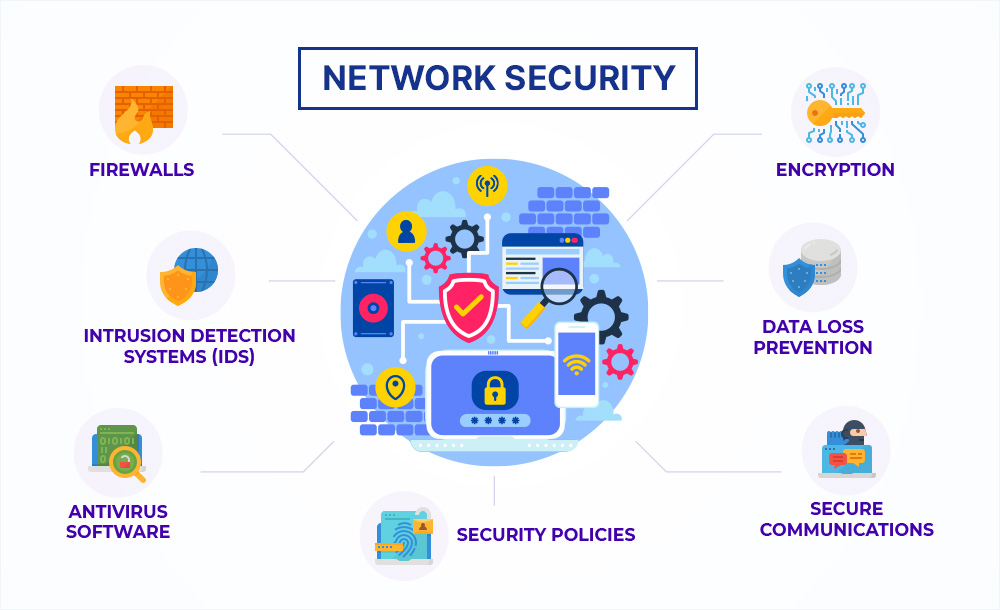

- Expertise in Network Security:

Learning to implement and manage security measures for networks, encompassing firewalls, VPNs, and intrusion prevention systems.

- Understanding Quality of Service (QoS):

Implementing Quality of Service mechanisms to effectively prioritize and manage network traffic.

- Proficiency in Wireless Networking:

Developing expertise in planning, implementing, and managing wireless networks, including considerations for security and effective troubleshooting.

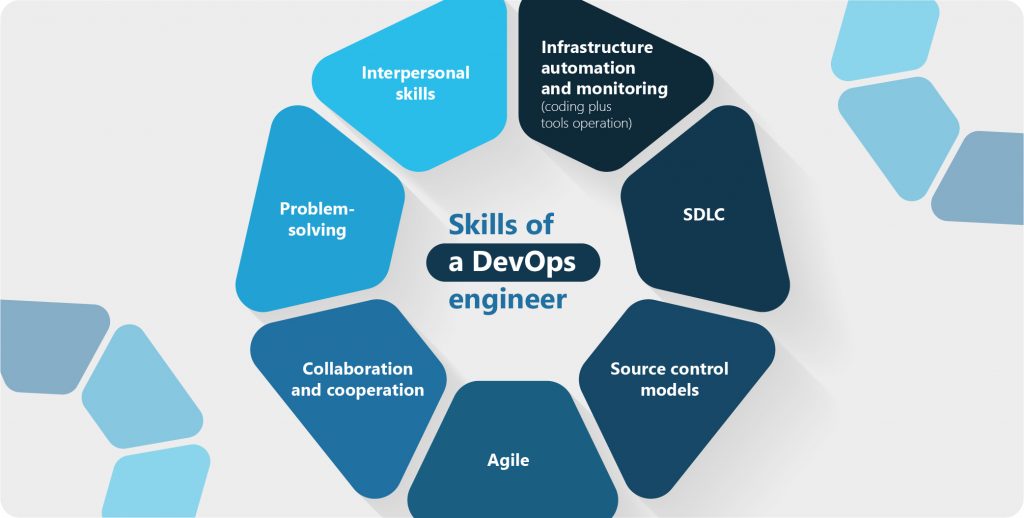

- Skills in Network Automation:

Developing the ability to automate network tasks using tools like Python and Ansible, streamlining processes and enhancing efficiency.

- Integration of Virtualization and Cloud:

Learning to integrate and manage networking in virtualized environments and cloud platforms, such as Cisco’s SD-WAN and cloud-based solutions.

- Refining Troubleshooting Skills:

Honing troubleshooting skills for diagnosing and efficiently resolving complex network issues.

- Understanding Wide Area Network (WAN) Technologies:

Gaining knowledge of various WAN technologies, such as MPLS, DMVPN, and SD-WAN, to ensure efficient and secure connectivity.

- Expertise in Multicast Routing:

Understanding multicast routing protocols and techniques to facilitate efficient content delivery across networks.

- Development of Network Design and Architecture Skills:

Acquiring skills in designing and implementing scalable and resilient network architectures.

- IPv6 Implementation Proficiency:

Learning to plan and implement IPv6 networks, a crucial skill as the adoption of IPv6 becomes increasingly important.

- Implementation of Policy-Based Routing (PBR):

Understanding and implementing policy-based routing to control the flow of traffic based on defined policies.

- Optimizing Network Performance:

Developing skills in optimizing network performance through techniques like traffic engineering and bandwidth management.

- Collaboration Technologies Expertise:

Depending on the chosen track, acquiring skills in collaboration technologies, including VoIP and unified communications.

- Mastering Enterprise Network Management:

Learning the implementation and management of network management tools and techniques for efficient network monitoring and administration.
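Two of the skills above, network automation with Python and IPv6 planning, combine naturally. The sketch below carves /64 subnets out of a site prefix with the standard-library `ipaddress` module and renders IOS-style interface configuration text from a template. It never connects to a device. The function names, the VLAN numbering that starts at 10, the choice of the first address as gateway, and the documentation prefix `2001:db8:100::/56` are all assumptions made for the example.

```python
import ipaddress
from itertools import islice
from string import Template

# IOS-style snippet used purely as text; nothing is pushed to a device.
INTERFACE_TEMPLATE = Template(
    "interface $name\n"
    " description $description\n"
    " ipv6 address $address\n"
    " no shutdown\n"
)


def plan_subnets(site_prefix: str, count: int, new_prefix: int = 64) -> list:
    """Return the first `count` subnets of length new_prefix inside site_prefix."""
    network = ipaddress.ip_network(site_prefix)
    return list(islice(network.subnets(new_prefix=new_prefix), count))


def render_interfaces(site_prefix: str, vlan_names: list) -> str:
    """Render one VLAN interface block per name, numbering VLANs from 10."""
    subnets = plan_subnets(site_prefix, len(vlan_names))
    blocks = []
    for vlan_id, (vlan_name, subnet) in enumerate(zip(vlan_names, subnets), start=10):
        gateway = subnet.network_address + 1  # assumption: first address is the gateway
        blocks.append(
            INTERFACE_TEMPLATE.substitute(
                name=f"Vlan{vlan_id}",
                description=vlan_name,
                address=f"{gateway}/{subnet.prefixlen}",
            )
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print(render_interfaces("2001:db8:100::/56", ["users", "voice"]))
```

Generating configuration as plain text first makes it easy to review or diff before any tool applies it to real equipment.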

What are the future trends in CCNP?

The future trends in CCNP are as follows-

- Emphasis on Network Automation and Programmability:

The ability to automate tasks and use programming languages like Python is becoming increasingly important in network management. Future CCNP professionals may find it valuable to have a strong grasp of automation tools and orchestration frameworks.

- Rise of Software-Defined Networking (SDN):

SDN is transforming how networks operate. Future CCNP candidates might need to delve deeper into SDN principles, including understanding controllers and software-defined architectures.

- Focus on Cloud Networking:

With services migrating to the cloud, CCNP professionals may encounter a growing need for expertise in cloud networking. Understanding cloud platforms, integration strategies, and managing hybrid or multi-cloud environments could become more prevalent.

- Integration of Network Security:

Security remains a top concern, and future CCNP professionals might be expected to seamlessly integrate security practices into network design and management, addressing emerging threats and ensuring compliance.

- Adoption of Edge Computing:

As edge computing gains traction, CCNP professionals may need to adapt network architectures to support distributed computing at the edge. This involves optimizing for low-latency and high-performance edge applications.

- Impact of 5G Networks:

The rollout of 5G introduces new challenges and opportunities in networking. CCNP professionals may need to understand how 5G influences network design, performance, and security.

- Incorporation of AI and Machine Learning:

AI and machine learning are playing a growing role in network management. Future CCNP professionals might need to incorporate these technologies for predictive analysis, anomaly detection, and network optimization.

- Addressing Challenges of IoT:

The increasing number of IoT devices presents challenges in scalability, security, and management. CCNP professionals may need to adapt to support IoT deployments effectively.

- Adoption of Zero Trust Networking:

The Zero Trust model, assuming no inherent trust, is gaining popularity. Future CCNP professionals may need to design networks aligned with zero-trust principles for enhanced security.

- Commitment to Continuous Learning and Certifications:

Given the dynamic nature of technology, CCNP professionals are encouraged to foster a culture of continuous learning. Staying updated with the latest technologies and pursuing relevant certifications beyond CCNP can be pivotal for ongoing career growth.
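To make the anomaly-detection idea from the list above concrete, here is a minimal sketch that flags unusual interface utilization samples with a simple z-score check. It is a statistical baseline rather than a machine-learning model, and the function name, the threshold of 2.0, and the sample values are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def find_anomalies(samples: list, threshold: float = 2.0) -> list:
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu = mean(samples)
    sigma = pstdev(samples)
    if sigma == 0:
        # All samples are identical, so nothing stands out.
        return []
    return [
        (index, value)
        for index, value in enumerate(samples)
        if abs(value - mu) / sigma > threshold
    ]


if __name__ == "__main__":
    # Made-up link utilization readings in percent.
    utilization = [41, 43, 40, 42, 44, 39, 95, 42, 41, 43]
    print(find_anomalies(utilization))
```

Production monitoring tools use far richer models, but the workflow is the same: establish a baseline, measure deviation from it, and alert when the deviation crosses a threshold.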

Where can I get the best CCNP training?

To enroll in a CCNP course, choose a training platform whose mentors provide quality education. One such platform is Network Kings.

The pros of choosing Network Kings for the CCNP course training program are as follows-

- Learn directly from expert engineers

- 24/7 lab access

- Pre-recorded sessions

- Live doubt-clearance sessions

- Completion certificate

- Flexible learning hours

- And much more.

NOTE: CCNP is a part of our Network Engineer Master Program. Click to Enroll Today!

What are the available job opportunities after the CCNP course?

The CCNP certification program prepares one for high-paying job roles in the industry. Hence, the top available job opportunities for the CCNP certified are as follows-

1. Network Engineer

2. Network Administrator

3. Systems Engineer

4. IT Consultant

5. Network Analyst

6. Senior Network Engineer

7. Network Architect

8. Network Security Engineer

9. Wireless Network Engineer

10. Data Center Engineer

11. Collaboration Engineer

12. Cloud Solutions Engineer

13. Network Manager

14. IT Manager / Network Technician

15. Cybersecurity Analyst

16. VoIP Engineer

17. Senior Systems Engineer

18. IT Project Manager

19. Technical Consultant

20. Network Operations Center (NOC) Engineer

What are the salary prospects after the CCNP course?

The approximate annual salary ranges for CCNP-certified professionals in different countries are as follows-

- United States: USD 80,000 – USD 120,000 per year

- Canada: CAD 70,000 – CAD 100,000 per year

- United Kingdom: GBP 40,000 – GBP 70,000 per year

- Australia: AUD 80,000 – AUD 110,000 per year

- Germany: EUR 50,000 – EUR 80,000 per year

- India: INR 6,00,000 – INR 15,00,000 per year

- Singapore: SGD 60,000 – SGD 90,000 per year

- United Arab Emirates: AED 120,000 – AED 200,000 per year

- Brazil: BRL 80,000 – BRL 150,000 per year

- South Africa: ZAR 400,000 – ZAR 800,000 per year

- Japan: JPY 6,000,000 – JPY 10,000,000 per year

- South Korea: KRW 60,000,000 – KRW 100,000,000 per year

- China: CNY 150,000 – CNY 300,000 per year

- Mexico: MXN 400,000 – MXN 800,000 per year

- Netherlands: EUR 50,000 – EUR 90,000 per year

Wrapping Up!

Opting for the Cisco Certified Network Professional (CCNP) certification proves to be a strategic move for career advancement. Armed with a versatile skill set that spans advanced networking technologies, CCNP-certified individuals find themselves in a favorable position for diverse global roles. As technology continues to progress, CCNP stands as a valuable asset, empowering professionals to adeptly manoeuvre the ever-changing field of networking and make substantial contributions to the success of organizations on a global scale.

Therefore, enroll in our Network Engineer Master Program to conquer the world of IT networking. Feel free to contact us for any help required.

Happy Learning!