Greetings! This post is about Amazon Relational Database Service (RDS), which is part of Amazon Web Services (AWS). We will take a deep look at the features and benefits that RDS has in store, and at how it supports remotely hosted applications and relational database hosting. You will also get an insight into the database engines RDS supports, alongside some tips on getting started. Let us find out what RDS in AWS is and what it has to offer!

Exploring the Basics of RDS in AWS: What is RDS in AWS

Getting to grips with the fundamentals of RDS in AWS is essential for anyone mulling over using this kind of cloud platform. Amazon Web Services (AWS) serves up Relational Database Service (RDS), which enables you to store data in an uncomplicated and effective manner.

Thanks to RDS, users avoid the hassle of running complex databases on their own infrastructure, so they are free to concentrate on building applications instead. But what does that mean? In simple terms, RDS is a managed cloud database service: AWS takes on the operational work of running your relational databases, and you work with the data.

With RDS, you can create and manage databases without provisioning or maintaining hardware yourself. It comes with automated backups, encryption and high-availability options, which make it a go-to choice for developers looking for reliable storage without the fuss of self-managing their environment. On the operations side, it also offers replication, point-in-time recovery and read replicas.

RDS simplifies database management for administrators while helping keep data safe and secure. It is also flexible enough to meet changing scalability requirements, since instance size and storage settings can be adjusted as needed. Together, automated backups, replication, read replicas and point-in-time recovery give administrators what they need to manage their databases confidently without tying up resources elsewhere in the business.
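
To make those features concrete, here is a minimal sketch in Python of the settings you might pass to boto3's `create_db_instance` call. The instance name, user name, size and retention period are illustrative assumptions rather than recommendations, and the request itself is only sent if you call `create_instance` with boto3 installed and AWS credentials configured.

```python
def build_create_params(identifier: str, engine: str = "postgres") -> dict:
    """Return keyword arguments for the boto3 RDS create_db_instance call."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": engine,
        "DBInstanceClass": "db.t3.micro",   # illustrative size, pick one for your workload
        "AllocatedStorage": 20,             # GiB
        "MasterUsername": "app_admin",      # hypothetical user name
        "ManageMasterUserPassword": True,   # RDS stores the password in Secrets Manager
        "BackupRetentionPeriod": 7,         # days of automated backups
        "StorageEncrypted": True,           # encryption at rest
        "MultiAZ": True,                    # standby in a second Availability Zone
        "PubliclyAccessible": False,
    }


def create_instance(params: dict, region: str = "us-east-1") -> dict:
    """Send the request. Needs boto3 and AWS credentials, so it is not called here."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("rds", region_name=region)
    return client.create_db_instance(**params)


params = build_create_params("demo-db")
print(sorted(params))
```

Keeping the parameters in a plain dictionary makes them easy to review or test before anything is created in your account.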

Delving into the AWS Cloud Environment

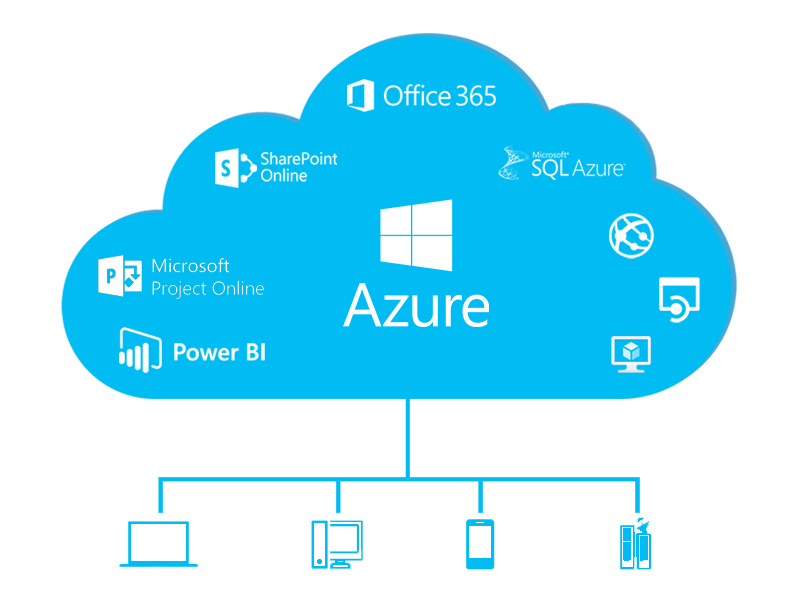

Going deeper into the AWS Cloud Environment, we need to understand what RDS in AWS stands for. Amazon Relational Database Service (RDS) is a web service that makes it much simpler to set up, operate and scale a relational database in the cloud. With Amazon RDS you can quickly launch multiple versions of popular database engines like MariaDB, MySQL, PostgreSQL, Oracle Database and Microsoft SQL Server.

The brilliant thing about Amazon RDS is that it takes care of routine database administration for you, including patching the database software, automating backups and handling failover. This means that your main job is managing the data stored inside the database and the schema around it, which makes life significantly simpler for developers and system administrators alike.

Amazon RDS supports high availability through Multi-AZ deployments, which keep a synchronously replicated standby in a different Availability Zone within the same AWS Region; for supported engines, read replicas can also be placed in other Regions. If the primary becomes unavailable, RDS fails over to the standby automatically, so interruptions are typically brief rather than prolonged. You can also scale the compute resources attached to your databases up or down according to what your application needs; this helps reduce expenses when workloads are light and maintain good performance when activity is highest.
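
As a rough illustration of scaling compute with demand, the Python sketch below picks the next instance class from a small ladder and builds the arguments for boto3's `modify_db_instance` call. The ladder, the 75% and 20% CPU thresholds and the helper names are assumptions made for this example; real sizing decisions depend on your workload, and changing the instance class usually involves a short interruption.

```python
# Hypothetical size ladder, smallest to largest.
SIZES = ["db.t3.medium", "db.m5.large", "db.m5.xlarge"]


def next_instance_class(current: str, avg_cpu_percent: float) -> str:
    """Step up when CPU is high, step down when it is low, otherwise stay put."""
    index = SIZES.index(current)
    if avg_cpu_percent > 75 and index < len(SIZES) - 1:
        return SIZES[index + 1]
    if avg_cpu_percent < 20 and index > 0:
        return SIZES[index - 1]
    return current


def build_resize_params(identifier: str, instance_class: str,
                        apply_immediately: bool = False) -> dict:
    """Return keyword arguments for the boto3 RDS modify_db_instance call."""
    return {
        "DBInstanceIdentifier": identifier,
        "DBInstanceClass": instance_class,
        # False defers the change to the next maintenance window.
        "ApplyImmediately": apply_immediately,
    }


target = next_instance_class("db.m5.large", avg_cpu_percent=90.0)
print(build_resize_params("demo-db", target))
```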

All these features combined make Amazon RDS a powerful tool for managing cloud-based databases – providing users more power over their infrastructure while at the same time giving cost savings compared to traditional ways. Have you thought about implementing Amazon RDS? It might be worth looking into…

Understanding the Concept of Database Hosting

When it comes to database hosting, a lot of people are likely to focus on the physical equipment used for storing databases. But actually, they are thinking too narrowly – database hosting is much more than that! In this blog post, we will look at what Database Hosting really means and how Amazon Relational Database Service (RDS) can help you take full advantage of your data.

Database hosting covers the various services and technologies used to create and maintain databases, so it is quite an extensive field!

When it comes to managing a database, there is plenty of stuff you need to think about. This includes setting up the infrastructure for hosting your database, handling performance optimization and making sure that data replication and backups are in order – amongst other factors such as security issues.

For instance, if you choose a managed solution such as AWS RDS, the cloud infrastructure is provisioned for you and patches to the underlying database software are applied automatically.

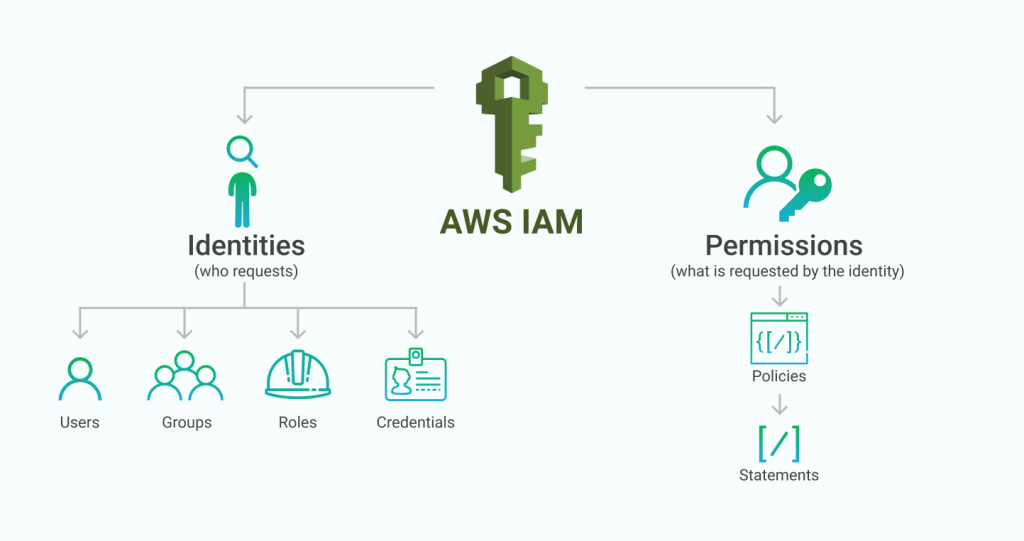

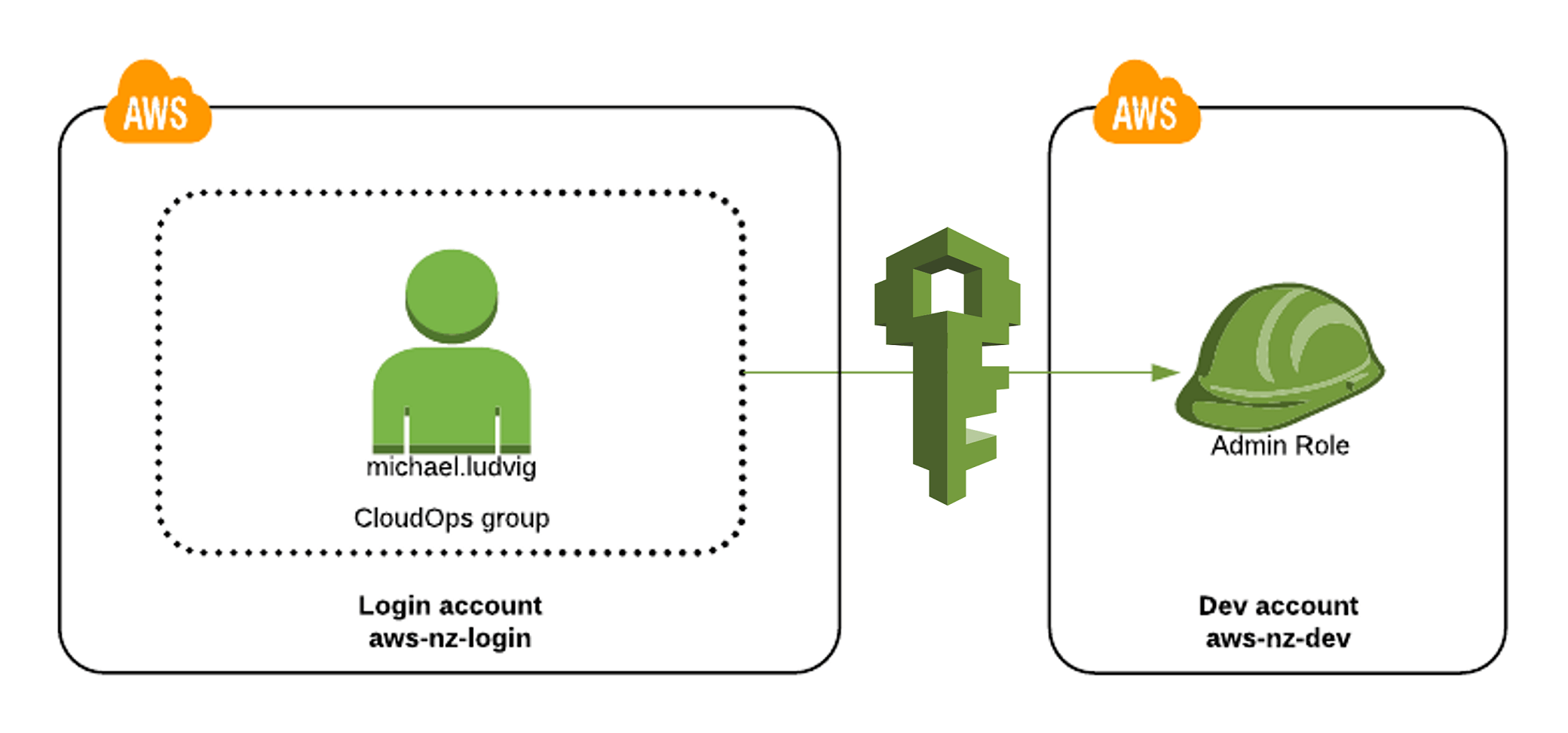

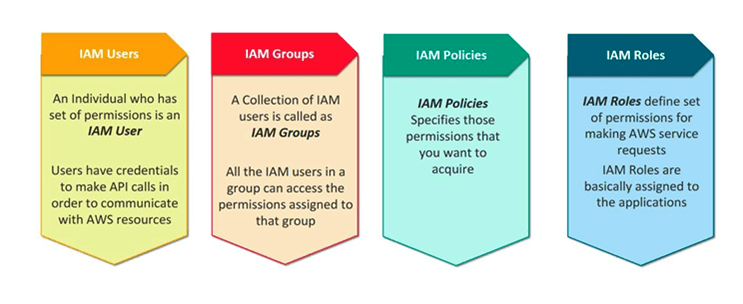

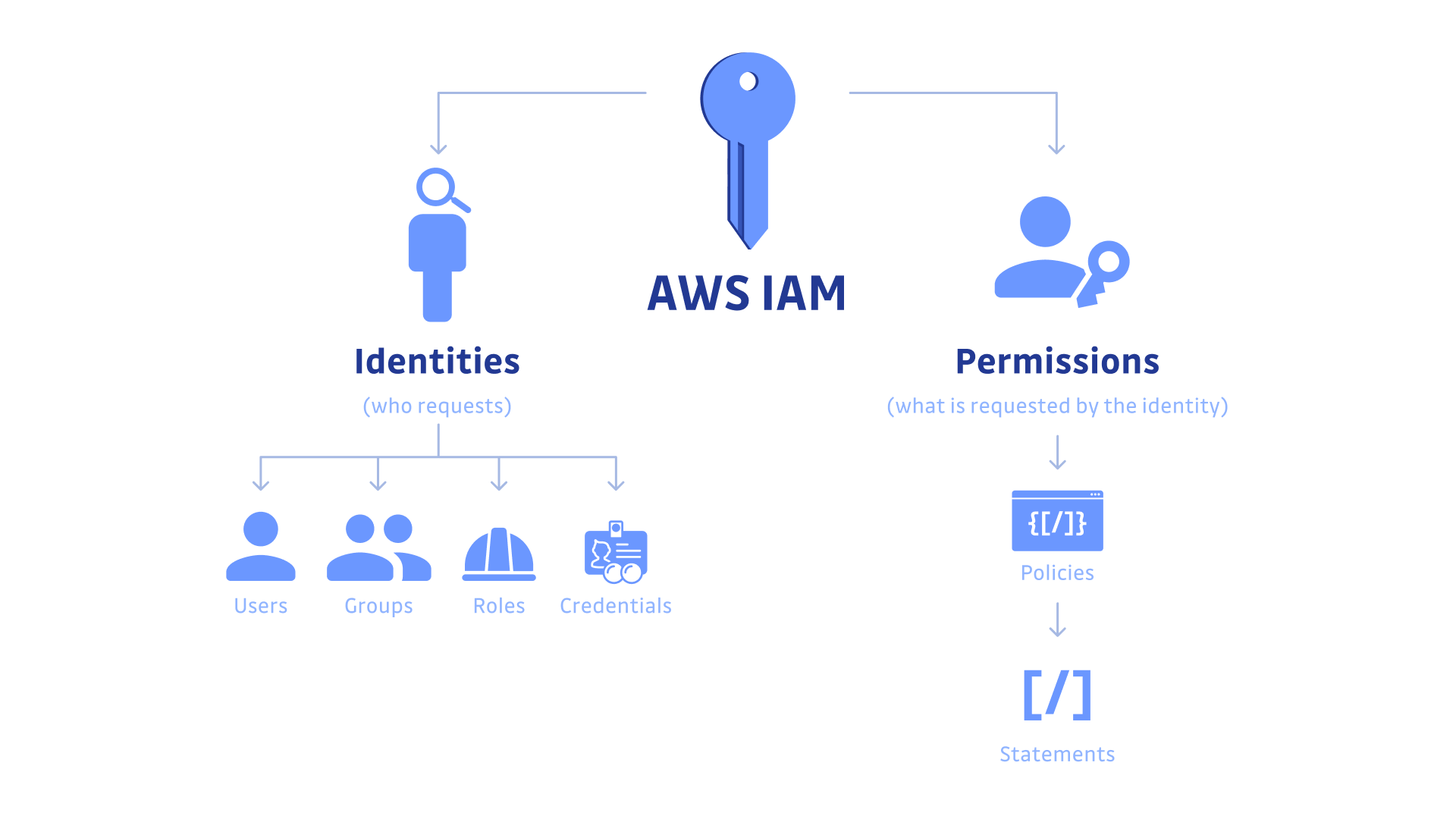

What more could you ask for? RDS has plenty of benefits to offer. Automated backups, point-in-time recovery, storage that can usually grow without downtime and encryption at rest are just some of the features that make it a strong choice for businesses wanting a reliable managed database that keeps their valuable data secure. Amazon also provides access management over databases through AWS Identity and Access Management (IAM) policies.

Using IAM policies, organizations get granular control over who can create, modify or delete RDS resources. For engines that support IAM database authentication (MySQL, MariaDB and PostgreSQL), policies can also decide who may connect to a database, which reduces the need to manage separate database passwords across many users. Developers can write custom policy statements that grant permissions for specific actions and resources rather than blanket access, which limits the exposure of sensitive business information if credentials fall into the wrong hands.
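
For a sense of what such a policy statement looks like, here is a small Python sketch that builds an IAM policy allowing one database user to connect through IAM database authentication. The `rds-db:connect` action and the ARN layout follow the AWS documentation; the account ID, resource ID and user name are made-up placeholders.

```python
import json


def build_connect_policy(region: str, account_id: str,
                         db_resource_id: str, db_user: str) -> dict:
    """Build a policy document that lets one database user connect via IAM auth."""
    resource = (
        f"arn:aws:rds-db:{region}:{account_id}:dbuser:{db_resource_id}/{db_user}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "rds-db:connect",  # connect only, no management actions
                "Resource": resource,
            }
        ],
    }


# Placeholder values for illustration only.
policy = build_connect_policy(
    "us-east-1", "123456789012", "db-ABCDEFGHIJKL01234", "report_reader"
)
print(json.dumps(policy, indent=2))
```

Scoping the resource to a single database user is what keeps the permission narrow; a wildcard in place of the user name would grant far broader access.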

To summarize: when using AWS RDS for database hosting, businesses can keep their valuable data in the cloud with benefits like automated patching and scaling, alongside robust access controls provided by IAM policies. Whether you are running a large enterprise or a small business that is just starting out, opting for a managed service such as Amazon RDS might turn out to be one of your smartest decisions yet!

The Role of Remote Services in AWS

Talking about cloud computing, Amazon Web Services (AWS) is one of the most widely used and feature-rich solutions out there. A key advantage it offers is its remote services abilities – making it great for providing scalable, dependable and secure access to databases in the cloud.

Amazon Relational Database Service (RDS) covers a range of relational engines: MySQL, MariaDB, PostgreSQL, Oracle Database, Microsoft SQL Server and Amazon Aurora. NoSQL databases such as MongoDB or Cassandra are outside its scope; AWS offers separate services for those workloads.

RDS makes it a breeze to set up and manage cloud databases, helping users quickly access storage resources, take control of security rules and keep an eye on performance metrics without having to do any hardware or software work locally. What’s more, RDS lets you scale up your database when needed, with little extra effort on your part.

RDS can also allocate extra storage as and when necessary without interrupting operations (a feature called storage autoscaling), which makes it far less likely that your applications take a hit from running out of space. Moreover, RDS’s encryption features and data replication capabilities add layers of protection against security breaches and loss of information.
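
Storage autoscaling is switched on by giving an instance a storage ceiling. The Python sketch below builds the arguments for boto3's `modify_db_instance` call with `MaxAllocatedStorage` set; the helper name and the example sizes are assumptions, and the check here only enforces that the ceiling is above the current size (AWS may apply stricter minimums).

```python
def build_storage_autoscaling_params(identifier: str, current_gib: int,
                                     ceiling_gib: int) -> dict:
    """Return modify_db_instance arguments that enable storage autoscaling."""
    if ceiling_gib <= current_gib:
        raise ValueError("ceiling must be larger than the current allocation")
    return {
        "DBInstanceIdentifier": identifier,
        # RDS may grow storage automatically, but never beyond this many GiB.
        "MaxAllocatedStorage": ceiling_gib,
        "ApplyImmediately": True,
    }


print(build_storage_autoscaling_params("demo-db", current_gib=100, ceiling_gib=500))
```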

To top it off, automated backups with RDS help make sure you are covered in case something goes awry, reducing manual labor while providing a reliable backup strategy for vital data assets. This makes it a good choice for organizations that want peace of mind in their cloud investment decisions, and it is just as convenient for those seeking quick database deployment.

Overall then, it is evident why RDS has become such a popular tool amongst AWS customers: its managed databases let them get more out of their cloud infrastructure while enjoying solid performance and security across several database engines.

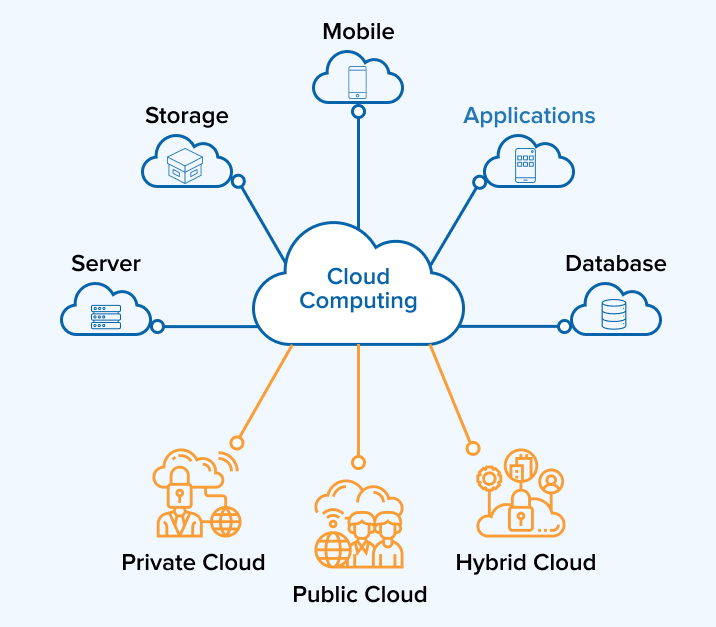

A Comprehensive Overview of Relational Databases

Cloud computing has been transforming the way companies store, handle and manage their data over the past few years. Amazon Web Services (AWS) provides a wide range of services that help customers take advantage of this trend. One such service is the Amazon Relational Database Service (RDS). RDS is an AWS-managed service which makes it easier to provision and maintain relational databases like Oracle, MySQL, PostgreSQL and Microsoft SQL Server. In this article, we will give you a thorough overview of what exactly RDS in AWS does and how it can be beneficial for your business.

Let us kick things off with a definition. RDS is a web service for running relational databases for applications on the AWS cloud. Users can create, configure, monitor and resize their databases in line with what they need without having to take care of the underlying infrastructure. Scalability, security and availability are major objectives for enterprise-level applications, so it is vital that RDS provides these attributes.

A managed service like RDS does away with much of the manual setup and configuration, an activity which can be tedious when done by hand. What’s more, there is no need to handle software installations or patches yourself, so you spend less time and effort on maintenance to keep the system current. Best of all, there is no need to buy pricey hardware up front: the database runs on compute and storage that AWS provisions and operates, so customers pay for what they use instead of purchasing expensive equipment as a one-off cost.

When it comes to features, RDS has several offerings that make it an attractive choice for businesses wanting an easy-to-manage solution for their database needs. Multi-AZ deployments provide high availability through replication across multiple Availability Zones. Automated backups make sure your data is regularly backed up. Read replicas provide read scalability. Point-in-time restore lets customers roll a database back to an earlier moment in case of disaster. Encryption protects the data itself, and access controls secure connections from inside and outside your network perimeter.
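
Two of these features map onto single API calls, sketched below in Python as parameter builders for boto3's `create_db_instance_read_replica` and `restore_db_instance_to_point_in_time`. The identifiers and the timestamp are placeholders chosen for this example, and the requests are not sent here.

```python
from datetime import datetime, timezone
from typing import Optional


def build_replica_params(source_id: str, replica_id: str) -> dict:
    """Arguments for create_db_instance_read_replica."""
    return {
        "SourceDBInstanceIdentifier": source_id,
        "DBInstanceIdentifier": replica_id,
    }


def build_pitr_params(source_id: str, target_id: str,
                      restore_time: Optional[datetime] = None) -> dict:
    """Arguments for restore_db_instance_to_point_in_time.

    The restore always creates a new instance (target_id); it does not
    overwrite the source. With no restore_time, the latest restorable
    time is used.
    """
    params = {
        "SourceDBInstanceIdentifier": source_id,
        "TargetDBInstanceIdentifier": target_id,
    }
    if restore_time is None:
        params["UseLatestRestorableTime"] = True
    else:
        if restore_time.tzinfo is None:
            raise ValueError("restore_time must be timezone-aware")
        params["RestoreTime"] = restore_time
    return params


print(build_replica_params("demo-db", "demo-db-replica-1"))
moment = datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc)  # placeholder timestamp
print(build_pitr_params("demo-db", "demo-db-restored", moment))
```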

Having said this, there are plenty of perks when using RDS from AWS including lower cost of ownership while also being able to work flexibly with managing your database infrastructure. What’s more, you can also benefit from the ease associated with setting things up!

How AWS RDS Streamlines Database Management

Cloud computing is fast becoming the ‘go to’ for companies of all sizes. Infrastructure costs are falling and firms can quickly get their hands on cloud services that offer scalability and versatility. Amazon Web Services (AWS) offers a wide selection of services, one being its Relational Database Service (RDS). RDS makes database management easier: you are able to set up relational databases without needing to look after infrastructure or go through complex configuration stages.

By using AWS RDS, establishing and looking after your database gets less bothersome since this platform takes away much if not most of the hassle typically associated with running a DB in-house!

Using Amazon Relational Database Service (AWS RDS) can make setting up relational database instances really easy. You don’t have to think about hardware resources or lots of complicated configuration, because AWS RDS looks after that for you. It also saves time by automating maintenance tasks such as patching and backups, and offers simple scaling options that can be applied in just a few clicks.

With AWS RDS, businesses can add resources or cut costs as demand fluctuates, with little disruption to applications. This makes it easier for them to remain agile and adjust their use of the cloud according to their requirements. What’s more, monitoring is built in through Amazon CloudWatch metrics, such as CPU utilization or free storage space, so users can keep an eye on performance, spot issues swiftly and keep things running smoothly. If something does go wrong, administrators have tools such as logs, events and snapshots available to tackle problems with minimal fuss.
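
As one way to read those metrics programmatically, the Python sketch below builds the arguments for boto3's CloudWatch `get_metric_statistics` call for the `CPUUtilization` metric in the `AWS/RDS` namespace, and picks the busiest datapoint out of a response. The instance name is a placeholder and the sample datapoints are made up to show the shape of the data, not real measurements.

```python
from datetime import datetime, timedelta, timezone


def build_cpu_query(identifier: str, end: datetime, hours: int = 1) -> dict:
    """Arguments for cloudwatch.get_metric_statistics, averaged over 5-minute buckets."""
    return {
        "Namespace": "AWS/RDS",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": identifier}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # seconds
        "Statistics": ["Average"],
    }


def busiest_datapoint(datapoints: list):
    """Return the datapoint with the highest average, or None if there are none."""
    return max(datapoints, key=lambda point: point["Average"], default=None)


end = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
query = build_cpu_query("demo-db", end)
print(query["StartTime"], "to", query["EndTime"])

# Made-up datapoints in the shape CloudWatch returns under "Datapoints".
sample = [
    {"Timestamp": end - timedelta(minutes=10), "Average": 35.0, "Unit": "Percent"},
    {"Timestamp": end - timedelta(minutes=5), "Average": 82.5, "Unit": "Percent"},
]
print(busiest_datapoint(sample))
```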

All things considered, AWS RDS has been a game changer: it has simplified how organizations store and manage databases while still leaving them in control of their operations. Big companies and fledgling startups alike need far less server setup and database configuration expertise, so more effort can go into delivering brilliant customer experiences.

The Impact of AWS RDS on Business Operations

Using AWS RDS can help businesses improve their operational performance in a secure and cost-effective manner. It offers features such as high availability, scalability, backup and recovery, and encryption, so organizations can run several databases securely. This has a real impact on day-to-day operations: tasks like database management become much more efficient, especially when compared with traditional methods that rely on manual intervention. Consistent, automated patching and backups can also improve data security. So how does access to these tools really affect business?

Amazon Relational Database Service (AWS RDS) makes large-scale data storage and access through standard SQL queries straightforward. Teams connect with the same clients and drivers they would use for a self-hosted database, and with AWS RDS they can update tables or databases without first tending to the servers underneath. The scalability options mean companies have the freedom to grow storage and computing power as necessary, so performance expectations can still be met as demand rises. RDS is also designed for reliability, with automated backups and Multi-AZ failover helping keep stored information available.

With this ability, companies get a better handle on their resource needs and can keep the right balance of resources at any given time. What’s more, AWS RDS works with networking controls such as security groups and AWS PrivateLink, which lets clients in a VPC (Virtual Private Cloud) reach supported AWS endpoints privately rather than over the public internet. Under the hood, RDS has built-in encryption features as well, helping customers protect confidential data from unauthorized access and theft.

By combining these primary safety measures with other controls, such as a WAF (Web Application Firewall) in front of the application tier, organizations can strengthen their defenses against cyber criminals while following relevant industry guidelines. All told, AWS RDS is an asset when you are looking to smooth out business operations, whether by putting your database management in order, allocating resources during peak times or adding security layers.

When you add in the financial rewards associated with this service, namely reduced costs for infrastructure maintenance, plus its capacity for improving efficiency along the way, few would argue against choosing Amazon RDS!

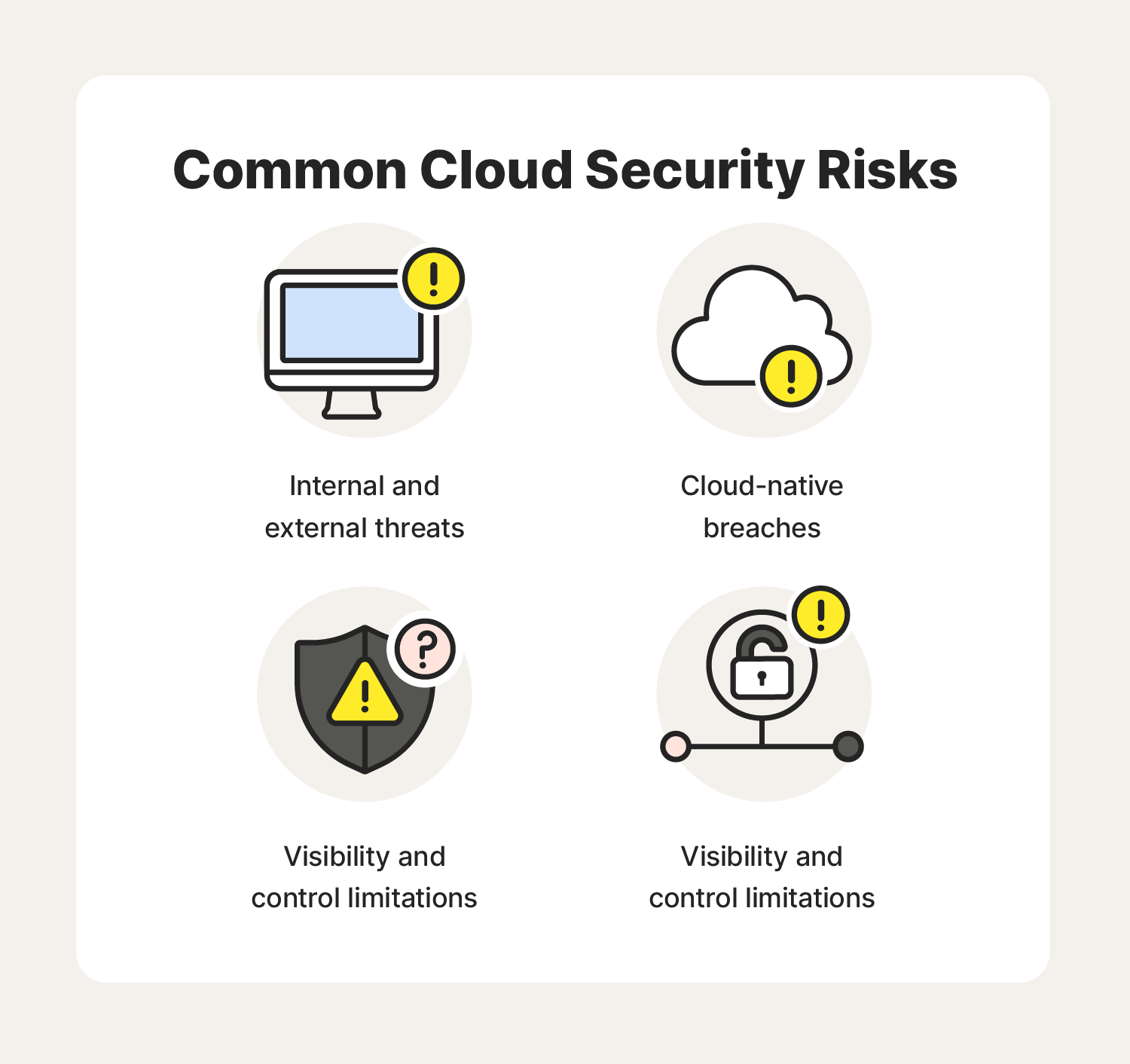

Essential Security Features in AWS RDS

If you are a business owner that hosts on the cloud, security is one of your top priorities. Amazon Web Services (AWS) Relational Database Service (RDS) provides several features to help your database stay secure. So what essential safety measures should you be aware of? Encryption plays an integral role in protecting databases. AWS RDS offers encryption at rest and in transit, along with control over the keys used for encryption through AWS Key Management Service (KMS), so it is up to you how much protection you apply to your business data and processes.

Encryption at rest is chosen when a DB instance is created. It cannot be switched off afterwards, and an existing unencrypted instance is typically encrypted by restoring it from an encrypted copy of a snapshot. Once enabled, encryption covers the underlying storage as well as automated backups, snapshots and read replicas. Another way that AWS RDS keeps databases secure is through identity and access management (IAM). IAM lets you define users and roles with different levels of access, which reduces risks like unauthorized entry, and gives you control over who may manage your databases and what they are allowed to do.
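
One commonly documented way to get an encrypted copy of an unencrypted instance is to snapshot it, copy the snapshot with a KMS key, and restore a new instance from the encrypted copy. The Python sketch below lays those three steps out as boto3 RDS method names with their arguments. The naming scheme and the key alias are assumptions for illustration, and real code would need to wait for each snapshot to become available before moving to the next step.

```python
def build_encryption_steps(instance_id: str, kms_key_id: str) -> list:
    """Return (method_name, kwargs) pairs to run in order against a boto3 RDS client."""
    plain_snapshot = f"{instance_id}-plain-snap"
    encrypted_snapshot = f"{instance_id}-encrypted-snap"
    return [
        # 1. Snapshot the existing, unencrypted instance.
        ("create_db_snapshot", {
            "DBInstanceIdentifier": instance_id,
            "DBSnapshotIdentifier": plain_snapshot,
        }),
        # 2. Copy the snapshot; supplying a KMS key encrypts the copy.
        ("copy_db_snapshot", {
            "SourceDBSnapshotIdentifier": plain_snapshot,
            "TargetDBSnapshotIdentifier": encrypted_snapshot,
            "KmsKeyId": kms_key_id,
        }),
        # 3. Restore a new instance from the encrypted copy.
        ("restore_db_instance_from_db_snapshot", {
            "DBInstanceIdentifier": f"{instance_id}-encrypted",
            "DBSnapshotIdentifier": encrypted_snapshot,
        }),
    ]


# "alias/my-rds-key" is a hypothetical key alias.
for method_name, kwargs in build_encryption_steps("demo-db", "alias/my-rds-key"):
    print(method_name, kwargs)
```

The restored instance gets a new identifier and endpoint, so applications have to be pointed at it once the switch is made.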

With IAM database authentication, logins use short-lived tokens (valid for 15 minutes) instead of long-lived passwords, which adds security when handling user accounts. Amazon RDS’s Multi-AZ deployment feature offers high availability and supports disaster recovery by synchronously replicating your data to a standby in a different Availability Zone (AZ). If the primary AZ goes offline, RDS fails over to the standby so the data stays accessible and current. This replication acts as a safeguard against information being lost due to hardware issues or prolonged power cuts in a single zone.

AWS RDS can also be paired with threat detection. Amazon GuardDuty, for example, offers RDS Protection for supported database engines, which analyzes login activity and flags suspicious behavior such as brute-force attempts. Administrators are alerted so they can put countermeasures in place quickly.

In addition to all these features, logging is available at two levels. AWS CloudTrail records calls made to the RDS API, such as changes to database configurations, while the database engine’s own logs (plus optional audit logging, where the engine supports it) can capture connections and queries. Together these give admins a clear overview of who has accessed the system, from where, and what actions were taken, giving them insight into possible threats before it is too late and making the security posture sturdier for businesses relying on AWS RDS.

Comparing RDS AWS with Other Database Services

When it comes to picking out the right database service for your needs, you might find yourself comparing RDS with other options. Relational Database Service (RDS), offered by Amazon Web Services (AWS), is a managed cloud database service. You can use it to store and operate relational databases in the cloud while benefiting from features such as high availability, scalability and security. When weighing different database services against each other, a few key differences are worth a look.

One major consideration is how much data a service can handle. RDS instances can be provisioned with tens of terabytes of storage (the exact ceiling depends on the engine and storage type), and Amazon Aurora scales higher still. That makes RDS a sensible option when your application needs to keep large amounts of information safe. Additionally, cloud hosting makes upsizing or downsizing easier than with on-premises solutions, which keeps scaling simple.

Another significant difference between RDS and some of its rivals is support for multiple database engines. While some services offer only a single engine option (for example MySQL or PostgreSQL), RDS supports several vendors’ engines, including Oracle Database, Microsoft SQL Server and Amazon Aurora, giving you the flexibility to pick the engine that best meets your needs. With this range of choices, you have more control over how your data is stored and managed on the cloud platform.

Finally, when comparing database services, consider their security features as well. Security is especially important when handling sensitive customer information or financial data kept in the cloud. Here again RDS holds up well: databases created through the service can be protected by encryption in transit as well as at rest, as additional protection against malicious attacks such as hacking or data theft. On top of that, IAM (Identity and Access Management) roles and multi-factor authentication support for users within an organization make access more secure.

Future Prospects for RDS in the AWS Ecosystem

RDS on AWS offers a reliable, adaptable and cost-efficient way of managing relational databases in the cloud. It is an efficient solution for both small and large data sets, with support for multiple database engines such as MySQL, Oracle, Microsoft SQL Server and PostgreSQL. Using RDS on AWS makes it possible to build a database environment swiftly, and you don’t have to worry about maintaining hardware or installing database software since that is managed by Amazon’s cloud platform. So it looks like there is plenty of positive potential ahead for RDS within the AWS ecosystem!

AWS has already invested heavily in its cloud-based relational database services, and looks set to keep investing as more organizations move to the cloud. One of the most exciting implications is more automatic scaling: features such as storage autoscaling and Aurora Serverless already adjust capacity with application demand, so less manual input is needed when busy periods come along. This makes things simpler for users, letting them keep their applications running smoothly without paying for resources they do not need.

What’s more, it appears Amazon’s NoSQL offerings are growing in popularity as an alternative to traditional relational databases for those needing extra flexibility in how they structure their data. At the same time, data held in RDS can be queried with standard SQL and connected to analytics tools, which simplifies uncovering valuable information inside your data sets.

With these tools there is no need to waste hours manually sifting through piles of numbers, since trends and patterns can be identified far more quickly. And with Amazon’s cloud infrastructure able to scale well beyond what most teams could run themselves, you can expand your datasets without having to procure further resources on your own.

Wrapping Up!

To conclude, Amazon RDS is a managed cloud service from AWS that provides a secure and reliable platform for hosting relational databases. It reduces the complexity of handling remote services, database hosting and other tasks, thereby freeing up resources for other important work. Furthermore, with the flexibility it offers in terms of scaling, Availability Zones and storage capacity, customers can tailor their databases to their own requirements.


Happy Learning!