Azure Functions is Microsoft’s serverless compute service for building and deploying applications quickly. It pairs the reach of the Azure cloud with a serverless execution model, so developers can ship software without provisioning or patching the servers underneath. The platform handles the infrastructure and adds capacity as demand grows, which leaves the team free to concentrate on the code.

So if you are looking for an effective way to produce efficient results from your app development efforts, exploring how Azure Functions works might be worth considering.

Understanding the Basics of Azure Functions

Azure Functions is a cloud service from Microsoft that lets developers run code without looking after the underlying infrastructure. It is well suited to small, focused jobs and makes event-driven programs quick to build. It runs on the same technology as Azure App Service and adds automatic scaling, triggers and bindings, which makes it a good fit for cloud applications that need quick response times or frequent updates.

If you are wondering whether Azure Functions is a wise choice, it helps to understand its components first. What kind of operations do you need to run? How long can they take? Are there specific scaling requirements? Answering these questions will help you decide whether this type of solution suits your needs. The main building blocks are:

- First off, there are triggers – like HTTP requests, timers and queue messages which can be used to kick things off.

- Secondly, we have bindings: declarative connections to other services. An input binding hands data to the function when it is invoked, and an output binding sends the function’s result somewhere else.

- Then, of course, there is the main event itself: the code that runs whenever its trigger fires.

- Last but not least come resource limits. The hosting plan determines how much memory and execution time each invocation gets and how many instances can run at once. It is almost like your own mini-computer ecosystem within one neat package!
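To make the pieces above concrete, here is a minimal sketch in plain Python of how a trigger, an input binding, the function code and an output binding fit together. It is a local simulation only: the `FunctionApp` class, the `on` decorator and the `queue:orders` and `table:invoices` names are all hypothetical and are not part of the Azure SDK.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FunctionApp:
    """Hypothetical in-memory stand-in for a function host (not the Azure SDK)."""
    handlers: Dict[str, Callable[[dict], dict]] = field(default_factory=dict)
    outputs: Dict[str, List[dict]] = field(default_factory=dict)

    def on(self, trigger: str, output: str):
        """Register a handler for a trigger and route its result to an output binding."""
        def decorator(fn):
            def wrapped(payload: dict) -> dict:
                result = fn(payload)  # the function code itself
                # Output binding: store the result under the named destination.
                self.outputs.setdefault(output, []).append(result)
                return result
            self.handlers[trigger] = wrapped
            return fn
        return decorator

    def fire(self, trigger: str, payload: dict) -> dict:
        """Simulate the platform raising an event for a trigger."""
        return self.handlers[trigger](payload)


app = FunctionApp()


@app.on(trigger="queue:orders", output="table:invoices")
def make_invoice(order: dict) -> dict:
    # Input binding: the queue message arrives as the `order` argument.
    return {"order_id": order["id"], "total": order["qty"] * order["unit_price"]}


invoice = app.fire("queue:orders", {"id": 7, "qty": 3, "unit_price": 2.5})
print(invoice)
```

The function itself never mentions the queue or the table. That separation is the point of bindings: the wiring lives outside the business logic.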

Azure Functions lets developers focus on their application logic while taking advantage of Microsoft’s cloud platform. It supports a range of languages, including C#, F#, Java, JavaScript and TypeScript (on Node.js), Python 3 and PowerShell, and custom handlers open the door to others. C# functions can be compiled into class libraries or written as C# script (.csx files). Supported versions change over time, so check the current documentation before you pick one. With so many options, most developers can start in a language they already know. Each function app runs a single language runtime, but apps written in different languages can still cooperate by calling each other over HTTP or passing messages through queues.

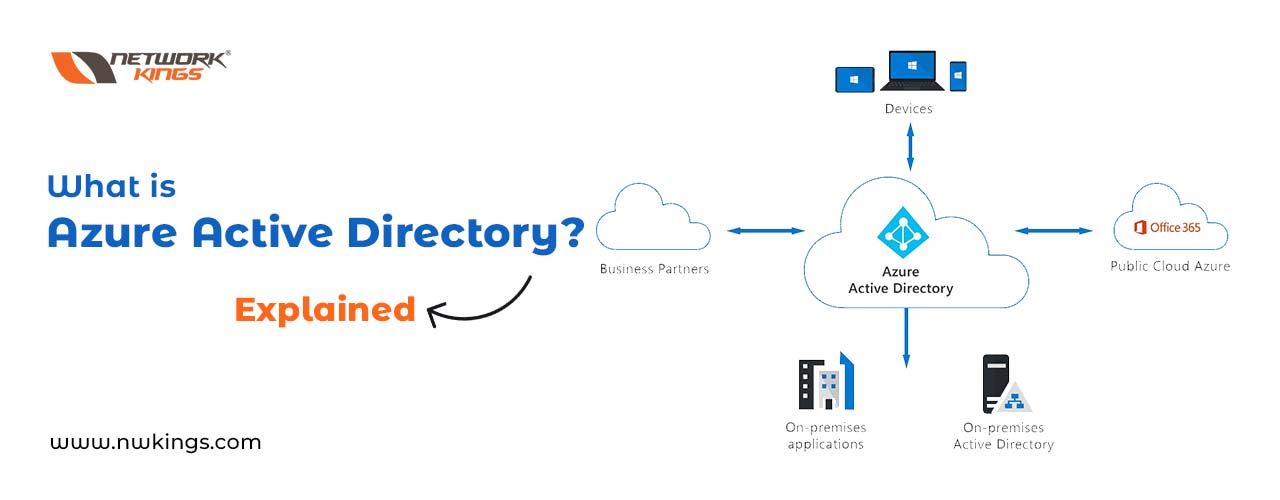

Functions written in different languages can therefore work together, so larger tasks can be split into small pieces and handled more productively. Azure Functions also offers authentication and authorization through integration with Microsoft Entra ID (formerly Azure Active Directory), and it can use Blob Storage to hold log files and other data your application needs. All of this comes with the security controls of the wider Azure platform.

Azure Functions also plugs into a wide range of other Azure services, which makes it a strong choice for cloud-native applications on Microsoft’s platform. API Management lets you publish functions as APIs and monitor usage patterns. Durable Functions, including durable entities, supports stateful serverless workflows. Functions can call Azure AI services and machine learning models through their APIs, can be packaged as Docker containers, and fit into DevOps pipelines for automated deployment. The service suits serverless architectures and microservice design patterns, and it can be combined with Logic Apps connectors to reach third-party services such as Salesforce or Slack with little or no custom code.

In short, these components let teams get their job done faster and more easily, focusing on core functionality rather than managing infrastructure or worrying about scalability.

The Role and Benefits of Azure Functions in Cloud Computing

Using Azure Functions for cloud computing has a few clear benefits. You can often save money compared with running your own machines, and you gain scalability and ease of use too. Code can be deployed quickly to one region or several. Functions are triggered by events or timers and can be written in a synchronous or asynchronous style, while the platform adds and removes instances as the load changes. With no infrastructure to manage yourself, there is less hassle overall!

Given the cost benefits and features that Azure Functions provides, it is no wonder it is such a popular platform. Managing applications across their entire lifecycle becomes much easier with this cloud service from Microsoft. I mean, come on: you don’t have to worry about setting up operating systems or virtual machines! The platform takes care of those, so developers can focus on the code. And let us not forget the built-in triggers for message queues, Blob Storage, Event Grid and more, all part of the package. It certainly makes sense that so many teams turn to this solution to run their workloads efficiently!

Azure Functions makes life easier for developers and businesses alike. It cuts down the time spent setting up services from the ground up and reduces complexity across the components of an application architecture. Applications built on the platform also gain autoscaling based on demand and analytics that surface key performance metrics. Because each function is deployed and run independently, a fault in one component is less likely to take down the rest of the application.

It also works with popular development platforms such as Node.js and .NET, so you can use familiar technologies to build sophisticated applications in a fraction of the time they would otherwise need.

To sum things up: using Azure Functions instead of self-managed servers has many advantages. Less setup hassle means more energy goes into bringing applications online faster, potentially at lower cost. All in all, it is a great option for companies looking for a cost-effective yet powerful way to run their apps without breaking a sweat!

Diving Deeper: The Anatomy of Azure Functions

Azure Functions is an amazing way to get maximum bang for your buck with minimal effort. It gives you the freedom to create and execute code in the cloud without setting up a server or any other infrastructure.

However, that power and flexibility brings complexity. Azure Functions has to be configured correctly and managed effectively, and both need a solid understanding of how the service works. In this article, we will try our best to take you from not knowing what functions are at all to being familiar enough that working with them becomes second nature!

Right, let us start with what an Azure Function is. Basically, it is a piece of code that runs on Microsoft’s cloud platform and can be written in various languages (JavaScript, C# or Java, to name but a few). Function apps can be hosted on Windows or Linux. What makes functions so useful is that they are triggered by events, such as an HTTP request or a change in a database, which saves us from writing lots of extra code for polling services and running scheduled jobs. Now on to deploying an Azure Function: how do we get started?

This part is simple but important: mess it up and your function won’t work! There are several ways to deploy an Azure Function, including the Azure Portal, Visual Studio Code, the command line, and automated pipelines driven from a GitHub repository, which also gives you version control and a deployment history. The key point is that you need to specify the language and runtime version your function app uses. This avoids compatibility issues between dependencies and keeps behaviour predictable when updates or releases occur.

Now that we have covered the basics of Azure Functions, let us look at triggers and bindings. Triggers define when a function should run: every day at a certain time, for example, or whenever a message arrives from another service. Once a trigger has been set up, the function is ready for use, waiting for input data to process according to whatever logic you have included!
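Timer triggers in Azure Functions are scheduled with a six-field cron-style expression: second, minute, hour, day, month and day of week. The sketch below shows how such an expression can be checked against a point in time. It is an illustration rather than the platform's own parser: `field_matches` and `schedule_matches` are hypothetical helpers, and they handle only `*`, `*/n`, single numbers and comma-separated lists.

```python
from datetime import datetime

FIELDS = ("second", "minute", "hour", "day", "month", "day_of_week")


def field_matches(spec: str, value: int) -> bool:
    """Match one field: '*', '*/n', a number, or a comma-separated list of numbers."""
    if spec == "*":
        return True
    if spec.startswith("*/"):
        return value % int(spec[2:]) == 0
    return value in {int(part) for part in spec.split(",")}


def schedule_matches(expression: str, moment: datetime) -> bool:
    """Return True if `moment` falls on the six-field schedule `expression`."""
    specs = expression.split()
    if len(specs) != len(FIELDS):
        raise ValueError("expected six fields: " + " ".join(FIELDS))
    values = (
        moment.second, moment.minute, moment.hour,
        moment.day, moment.month,
        moment.isoweekday() % 7,  # 0 = Sunday, following the cron convention
    )
    return all(field_matches(s, v) for s, v in zip(specs, values))


daily_0930 = "0 30 9 * * *"      # every day at 09:30:00
every_5_min = "0 */5 * * * *"    # every five minutes, on the minute
monday_0800 = "0 0 8 * * 1"      # Mondays at 08:00:00

print(schedule_matches(daily_0930, datetime(2024, 1, 15, 9, 30, 0)))
```

Note the leading seconds field, which a standard five-field cron expression does not have.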

Bindings help keep everything running smoothly. Input bindings supply data to a function, and output bindings define where results go, such as a database or a queue, so they can be processed further by other functions or applications. Together with the host’s concurrency settings, they let you tune a function so that it responds quickly without overwhelming downstream services with too many requests at once.

Security matters with Azure Functions too. You can restrict access by assigning roles such as Reader, Contributor or Owner to users and groups, and you should make sure any confidential data held in external services such as databases is encrypted so that only authorized people can see it.
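The idea behind role-based access can be sketched in a few lines. The permission sets below are a simplified assumption for illustration (real Azure roles cover far more granular actions), and `is_allowed` is a hypothetical helper, not an Azure API.

```python
# Hypothetical, simplified permission sets for the three roles named above.
ROLE_PERMISSIONS = {
    "Reader": {"read"},
    "Contributor": {"read", "write"},
    "Owner": {"read", "write", "assign_roles"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True if the role grants the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


for role in ("Reader", "Contributor", "Owner"):
    print(role, "can write:", is_allowed(role, "write"))
```

Denying by default for unknown roles is the safer design choice: a typo in a role name then locks someone out instead of letting them in.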

Even though there is no server for us to manage, there are still logs, and they should be reviewed regularly for signs of malicious activity as well as for general performance information such as how often each function is called.
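As a rough sketch of the kind of information worth capturing, the decorator below logs how long each call takes and counts invocations per function, using only Python's standard `logging` module. The `monitored` decorator, the in-memory `invocations` counter and the `resize_image` example are hypothetical. A deployed app would normally rely on the platform's monitoring service rather than a local counter.

```python
import logging
import time
from collections import Counter
from functools import wraps

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("functions")

# Hypothetical in-memory counter; it resets whenever the process restarts.
invocations = Counter()


def monitored(fn):
    """Log each call's duration and count invocations per function name."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        started = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Runs whether the call succeeded or raised, so failures are counted too.
            elapsed_ms = (time.perf_counter() - started) * 1000
            invocations[fn.__name__] += 1
            log.info("%s finished in %.2f ms", fn.__name__, elapsed_ms)
    return wrapper


@monitored
def resize_image(width: int, height: int) -> int:
    """Stand-in for real work: returns the pixel count."""
    return width * height


resize_image(640, 480)
resize_image(800, 600)
print(invocations)
```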

All these measures keep your data secure and give you real knowledge of how your functions behave under different conditions. That understanding is essential when you are trying to solve problems or improve efficiency!

Comparing Azure Functions with Other Cloud Services

When it comes to choosing a cloud service, cost is always an important factor. So when you compare Azure Functions with the other options, what do you get for your money? Azure Functions covers a lot of ground, from scalability and security to pricing models designed for serverless workloads. In terms of value for money, it is definitely worth a look at what is on offer.

But there are factors besides price to consider when comparing cloud providers, such as reliability and performance. These depend heavily on the provider’s underlying infrastructure, so looking closely at its architecture gives you a sense of what uptime and latency to expect.

In this regard, Azure Functions stands on solid ground. Microsoft has invested heavily over several years in a single platform that supports applications across regions around the world, which keeps the service competitive with major public cloud peers such as AWS Lambda.

Finally, we come full circle to availability. Thanks to Microsoft’s large international presence, customers can get support wherever they are located, including round-the-clock technical assistance. Taken together, these features mean that choosing Azure Functions carries less risk, because issues can be mitigated quickly, which gives peace of mind to anyone investing big bucks!

Generally speaking, Azure Functions can be more cost-effective than running always-on servers. The main reason is that Microsoft provisions and maintains the underlying compute, which cuts setup and maintenance costs, and the platform is designed specifically for hosting functions, so you are not paying for storage or compute power that sits idle.

Then there is the scalability factor. Traditional models usually need manual intervention in order to scale, a difficult task at best and a time-consuming one too! There are some great alternatives when you are searching for a cloud provider, but if you are interested in affordability, scalability and security, it is hard to ignore the advantages of Azure Functions.

Not only is it cost-effective, but its automatic scaling means your application can handle increased demand without manual intervention, which is perfect for apps that need large amounts of computing power in short bursts! On top of all this, it offers plenty of authentication and authorization support as well as encryption, which helps keep malicious activity away from your data and applications. What more could anyone ask for?

Azure Cloud: The Perfect Home for Azure Functions

Azure Cloud is a brilliant platform for hosting Azure Functions thanks to all the features and services it has on offer, which make running applications and handling data an absolute doddle! With its dependable scalability, extensive security measures and broad range of tools, Azure Cloud provides the perfect setting for Azure Functions. Adjusting resources is easy, so your app can stay responsive even as traffic climbs.

Going to the cloud can be a great way of speeding up deployment, and with Azure Cloud it is even easier. You don’t have to manage multiple separate servers or clusters when setting up new applications, so all that overhead disappears! For businesses in highly competitive markets where every millisecond counts, this means a smooth experience without sacrificing security: authentication systems and encryption protocols help protect your data from unauthorized access and other cyber threats. So if you are looking for speed, reliability and peace of mind, Azure Cloud is well worth a look!

Having your data stored securely in the cloud means it is protected even if something goes wrong on-premises. Azure Cloud offers a wealth of tools for deploying, monitoring and debugging functions, whether you are working remotely or onsite. Tools such as Azure Monitor and Application Insights make it easy to track performance metrics such as uptime and response time, and further insights into CPU utilization or memory usage help keep everything running smoothly.

Best of all, these tools are user-friendly, so developers can get their functions up and running quickly. All in all, hosting apps with Azure Cloud comes with features ideal for managing Functions: scalability, security measures and tooling combine to make Azure-related tasks far less effort. How good does that sound?

Practical Applications of Azure Functions in Business

Azure Functions is gaining popularity in the business community, and it is easy to see why. It offers a lot of flexibility at low cost, making it a great serverless computing solution. Companies across many sectors can use Azure Functions to save money and increase efficiency, and there are countless scenarios where it comes into play! Take the automation of mundane tasks as an example: work that would otherwise need manual effort can be handed to a function, leaving businesses extra time for more important projects.

Azure Functions also makes it simpler to launch complex projects, because triggers and bindings replace much of the plumbing code you would otherwise write from scratch. It is handy in data processing too: companies use the serverless platform to build and test pipelines without maintaining virtual machines or servers, which saves operational costs and leaves more time for drawing insights from datasets.

Other uses include building microservice architectures entirely from Azure Functions and running machine learning workloads for research and analysis.

Azure Functions enable companies to make the most of cloud technology without having to splash out on pricey infrastructure or recruit extra staff just to keep it going. All this gives businesses an advantage over their rivals who don’t use Azure, and that could be a huge aid in the long term.

In today’s tech-crazy world, there are masses of possible uses for Azure Functions – regardless if you are a big business or a small one. Automating repetitive tasks? No problem! Processing data? That too! Even creating whole microservice architectures – yep, Microsoft’s serverless computing solution can help with all these things and more; so no matter what line your company is in, it would definitely be wise not to ignore what this powerful tool has to offer.

Demystifying Serverless Computing with Azure Functions

Serverless computing is really taking off in the cloud computing world, and with Azure Functions it is a doddle to get your project up and running. No more worrying about complex servers or networks – Azure Functions takes all that hassle away from you by providing an uncomplicated way of developing powerful applications quickly. It means goodbye to those laborious server-side development processes, freeing you up for other tasks!

With Azure Functions, you create small units of code known as ‘functions’. Each one runs when it is triggered by an event or a timer, such as someone visiting your website, a message arriving on a queue, a file being uploaded to storage or a customer checking out an online shopping cart. Azure then executes the code associated with that function, which could be anything from a simple automated task such as sending emails or running a data analysis script to more complex work such as web services and machine learning models.

It is fascinating how technology keeps evolving for everyone’s benefit! With traditional server-side applications, developers have to keep large codebases and the systems beneath them up to date. Azure Functions offers a more sustainable approach: because the application is built from functions rather than one massive app, developers only need to modify small components, and system upkeep and expensive upgrades are left to the platform.

What’s more, the scalability of Azure Functions makes it cost-effective for companies of all sizes. How awesome is that? On the Consumption plan you are billed only while your code runs, so you pay in proportion to actual usage, even if demand for a particular service takes off unexpectedly. The platform scales functions out automatically when needed, with no extra hardware to buy and no software updates to install.

Azure Functions also supports several languages, such as C#, JavaScript (on Node.js) and Python, so developers can pick the one most suitable for their project. Microsoft likewise provides tools such as VS Code extensions, so programmers who are already familiar with Visual Studio (VS), SQL Server Management Studio (SSMS) or other development environments can quickly get used to the features Azure Functions offers.

Altogether, if you are looking for a cloud computing platform that combines simplicity with scalability and good value without giving up flexibility, Azure Functions should be near the top of your list!

The Cost-effectiveness of Azure Functions in Cloud Services

For businesses that want to make the most of their money and use cloud services effectively, Azure Functions is a strong choice. Whether it is for web apps or machine learning models, you can use serverless computing resources and often save a lot compared with a dedicated server set-up. On the Consumption plan the cost depends on how many times your functions run, how long they run for and how much memory they use, so lightly used workloads can be very cheap. What’s more, your business gets all this without the headache of complex hardware management.

Additionally, with Azure Functions you only pay for what you use, instead of shelling out a fixed amount no matter how much or little you use it. This makes it one of the most cost-effective options in cloud computing for workloads with uneven demand. But that’s not all! There is no need to fuss about setting up complex systems or to worry whether your solution will cope as your user base expands, because Azure Functions scales automatically and makes resources available as demand rises. How great!
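A quick worked example shows how pay-per-use billing adds up. The sketch below assumes a model with two meters, the number of executions and the memory-time consumed (GB-seconds), each with a monthly free allowance. The rates and allowances are placeholder assumptions for illustration, not a quote, so check the current Azure pricing page before relying on them. `monthly_cost` is a hypothetical helper.

```python
import math


def monthly_cost(executions: int, avg_seconds: float, memory_gb: float,
                 price_per_million: float = 0.20,        # assumed rate per million executions
                 price_per_gb_second: float = 0.000016,  # assumed rate per GB-second
                 free_executions: int = 1_000_000,       # assumed monthly free allowance
                 free_gb_seconds: float = 400_000) -> float:
    """Estimate a monthly bill from execution count, duration and memory."""
    gb_seconds = executions * avg_seconds * memory_gb
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)
    billable_executions = max(0, executions - free_executions)
    return (billable_executions / 1_000_000 * price_per_million
            + billable_gb_seconds * price_per_gb_second)


# 3 million runs a month, 1 second each, using 0.5 GB of memory:
# 1,500,000 GB-s used, 1,100,000 billable -> 17.60; 2 million billable runs -> 0.40
busy = monthly_cost(3_000_000, 1.0, 0.5)
idle = monthly_cost(0, 1.0, 0.5)
print(round(busy, 2), idle)
```

The idle case is the important one: with no executions there is nothing to bill under this model, which is the difference from a server that costs the same whether or not anyone uses it.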

Azure Functions also comes with a great deal of flexibility. You can easily integrate other services such as storage and databases, reusing existing ones rather than building from the ground up, which saves both time and money in the long run. What’s more, it integrates with Azure’s logging and monitoring services and can expose HTTP endpoints directly, which helps reduce your costs further still!

Plus, if that wasn’t enough, there are plenty of programming language options too, including JavaScript, C#, Python, Java and PowerShell, with custom handlers available for other languages. That gives businesses freedom to choose the tech stack that best suits their needs without ballooning capital expenditure.

How Azure Functions Facilitate Scalability in Cloud Computing

Azure Functions is part of the Microsoft Azure portfolio of services and lets developers craft and deploy custom software solutions easily in the cloud. It makes scalability simpler: developers can create and run programs that grow with their business requirements thanks to auto-scaling and the serverless hosting options Azure Functions offers. Functions give businesses the freedom to scale up or down at any time. How handy is that?

What does Azure Functions provide? An event-driven programming model that lets developers create applications which respond to external events such as changes in temperature, customer orders or stock market fluctuations. Because code only runs when an event arrives and the platform adds instances as the workload increases, apps can stay responsive as demand rises and falls.

Plus, there are built-in monitoring tools that help developers keep an eye on application performance and scalability!

With advanced logging and straightforward access to metrics such as CPU utilization or I/O usage, developers can spot likely issues when scaling their applications in the cloud. By combining robust monitoring tools with the automated scaling that Azure Functions supplies, businesses can feel confident that their apps will stay dependable as conditions change.
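To give a feel for how a metric can drive automated scaling, here is a toy rule that sizes the number of instances from a queue backlog. It is purely illustrative and is not Azure's actual scaling algorithm: `desired_instances`, the per-instance target of 100 messages and the cap of 20 instances are all assumptions.

```python
import math


def desired_instances(queue_length: int, per_instance: int = 100,
                      min_instances: int = 0, max_instances: int = 20) -> int:
    """Hypothetical rule: one instance per `per_instance` waiting messages, within limits."""
    if queue_length <= 0:
        return min_instances  # nothing to do, so scale in to the floor
    needed = math.ceil(queue_length / per_instance)
    return max(min_instances, min(max_instances, needed))


for backlog in (0, 1, 250, 10_000):
    print(backlog, "->", desired_instances(backlog))
```

The upper limit matters as much as the rule itself, because it stops a sudden flood of events from overwhelming whatever the functions write to downstream.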

In a nutshell, Azure Functions offers an extensive array of features for scalability in cloud computing environments. From event-driven architecture to automated scaling and robust monitoring tools, Azure gives companies the essential resources for creating reliable cloud solutions that can ramp up and scale back down without interruption or downtime.

Future Trends: The Evolution of Azure Functions and Serverless Computing

Serverless computing has been around for some years now, and Microsoft’s Azure Functions has raised the bar. By building cloud-based applications on serverless technology, developers and businesses gain a level of efficiency and scalability that is hard to beat. Azure Functions gives coders a simple way of creating apps with a serverless design. But what does this mean for you? How can your business benefit from such techniques?

Serverless computing is a computing model in which developers deploy code to the cloud and do not have to manage server instances or provision software components. They can just concentrate on writing code.

To put it simply, it eliminates all the bother involved with managing underlying software resources such as servers and databases. No more worries about dealing with hardware! So how does this actually work?

Well, instead of running your own web server, you hand these operations to a cloud provider such as Amazon Web Services (AWS) or Microsoft Azure, which takes responsibility for hosting your applications and keeping them available at scale. Developers no longer have to configure virtualised infrastructure to handle growth, so they are free to focus on building features rather than on server maintenance or deployment chores that would traditionally need manual intervention from an IT team.

In addition, serverless computing offers significant cost savings, because users only pay for what gets used. When their apps go idle during quieter periods, costs go down too!

The great thing about Azure Functions is that it lets you create robust applications without worrying about infrastructure or hardware: all the hard work of hosting is left to Microsoft. It means you don’t have to panic when things become hectic, since Azure will take care of scaling up and down for you! Plus, building an app from small, independent functions in a microservices style helps contain faults, which is pretty awesome!

Cutting out the traditional headaches of setting up big applications, such as downtime and data loss, is one of the main advantages of using Azure Functions. What’s more, writing functions in JavaScript or Python keeps debugging and modification simple, with no need for complex configuration management scripts. If you want more flexibility or features than Microsoft gives you by default, you can deploy your own custom-written scripts as functions.

Finally, companies don’t need to purchase hardware or set up intricate IT environments when they build applications on Azure Functions, which results in considerable cost savings over time. Microsoft’s offering is an attractive way to create sound apps quickly and conveniently with limited expenditure.

With today’s rapid advancement towards sophisticated serverless architectures like that provided by Azure Functions, it looks likely that more organisations will capitalise on the power of such platforms in order to reach their objectives faster than ever before! Have you thought about how your business could benefit from using an advanced cloud-based architecture?

Wrapping Up!

In conclusion, Azure Functions is a remarkable addition to the cloud computing world. It provides developers with both serverless capabilities and an abundance of choices for creating applications on Azure’s cloud platform. This combination makes it possible to rapidly develop robust apps in an efficient way that also keeps security measures intact, as well as being cost-effective. No wonder then this technology has become increasingly popular among app creators so quickly!



Happy Learning!