In the ever-evolving landscape of Information Technology (IT), one term has been gaining more attention with each passing day: Artificial Intelligence (AI). The fusion of AI and IT has been transformative, offering innovative solutions, optimizing processes, and ushering in a new era of technological advancement. In this guide, we will look closely at what AI in IT actually is, exploring its fundamental concepts, applications, benefits, challenges, and future trends.

The purpose of this blog is to demystify AI in IT, offering a comprehensive guide to readers who may be new to the subject or looking to deepen their understanding. We will cover the basic principles of AI, explore its myriad applications in IT, discuss the benefits and challenges associated with AI implementation, showcase real-world examples, and look ahead to future trends. By the end of this guide, you’ll have a solid grasp of what AI in IT is all about and how it can shape the future of your organization.

What is AI in IT?

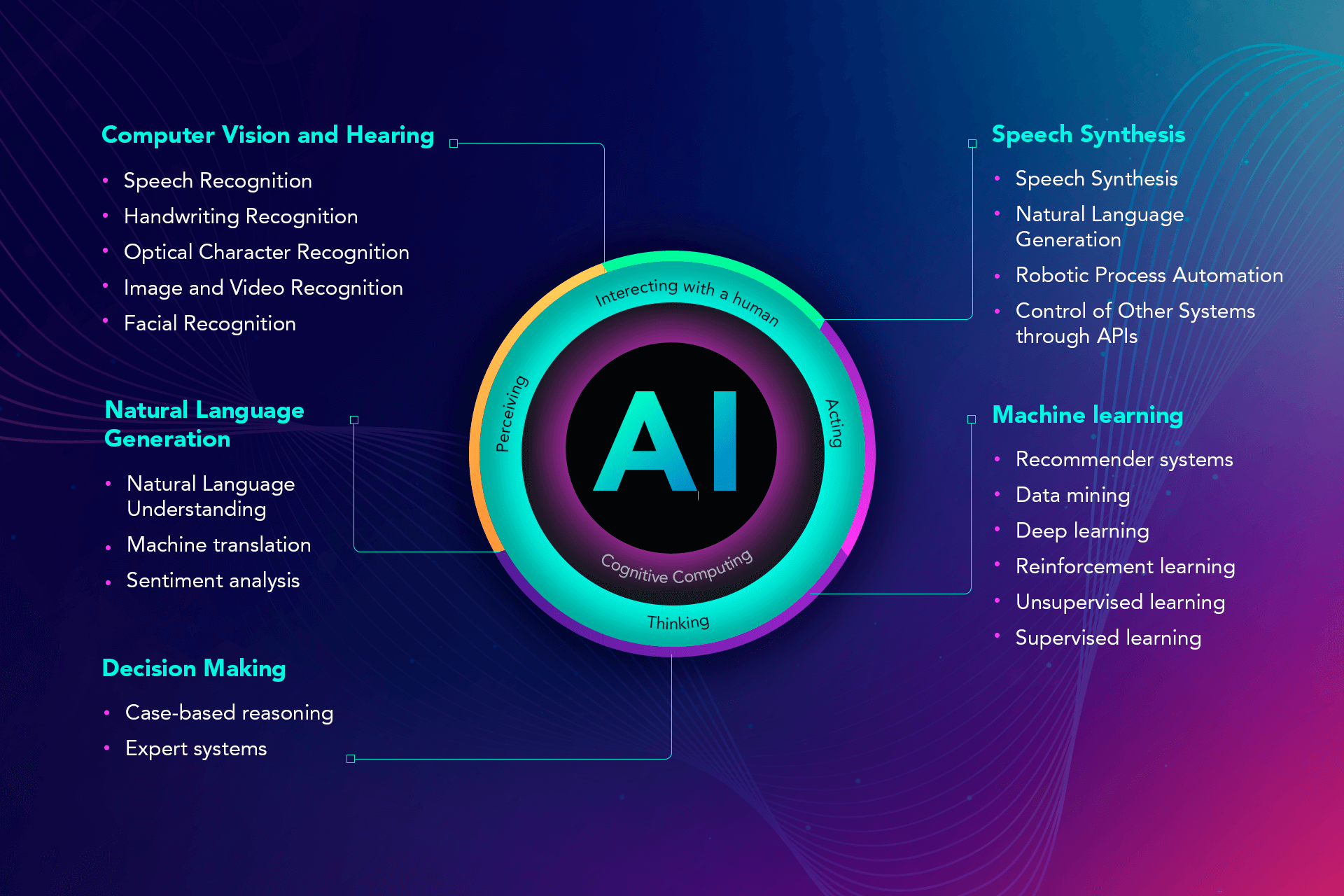

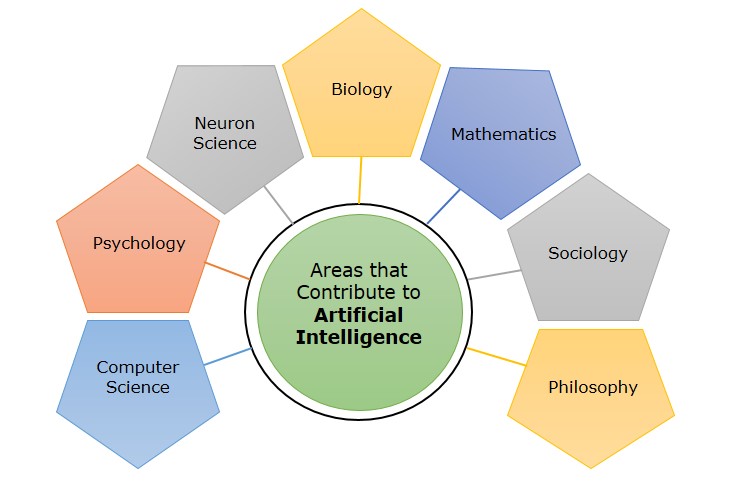

Artificial Intelligence (AI) is the simulation of human intelligence processes by machines, especially computer systems. It encompasses a wide range of technologies and techniques that enable computers to perform tasks that typically require human intelligence. In the context of IT, AI is the use of these technologies to enhance and automate various functions within the IT ecosystem.

What is the significance and relevance of AI in IT?

AI has already changed how much of the IT industry works, and it is likely to change it further. AI in IT is about more than just automating repetitive tasks; it’s about leveraging data-driven insights, enhancing decision-making, and providing a competitive edge. From data analytics to cybersecurity, AI is changing the way we approach and solve IT challenges.

What are the basic principles of AI?

At its core, AI seeks to mimic human intelligence. This involves the ability to learn from experience, adapt to new situations, understand and process natural language, recognize patterns, and make decisions. Machine learning, deep learning, and natural language processing are some of the key subfields of AI that enable computers to perform these tasks.

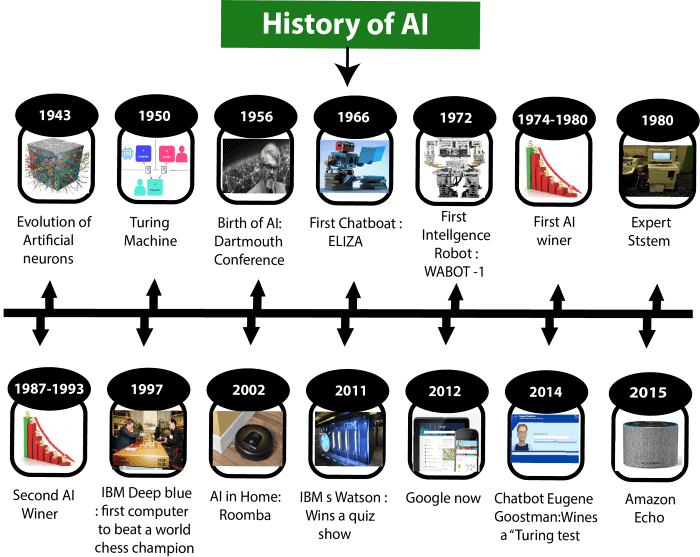

What is the history and evolution of AI in IT?

The idea behind AI has old roots: philosophers and inventors long speculated about, and tried to build, mechanical devices that could simulate human thought. The modern field, however, was born in the mid-20th century, when computer scientists began to develop algorithms that could perform tasks requiring human intelligence; the term “artificial intelligence” itself dates from the 1956 Dartmouth workshop. As computing power has grown, AI has advanced significantly, and it has found numerous applications in IT.

What are the key components of AI in IT?

AI in IT relies on several essential components:

Data

AI systems require vast amounts of data to learn and make informed decisions. In IT, this data can come from various sources, such as system logs, user interactions, and sensor data.

Algorithms

These are the mathematical and statistical models that AI systems use to analyze data and make predictions. Depending on the problem at hand, different algorithms are employed, such as regression, decision trees, and neural networks.

Machine Learning

This is a subset of AI that focuses on creating algorithms that can learn and improve from experience. Supervised learning, unsupervised learning, and reinforcement learning are common approaches within machine learning.
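To make supervised learning a little more concrete, here is a minimal sketch of a k-nearest-neighbours classifier in plain Python. The metrics, the labels, and the `TRAINING` data are invented for illustration; a real project would more likely use a library and far more data.

```python
from collections import Counter
from math import dist

# Hypothetical labelled history: (cpu_percent, error_rate_percent) -> state
TRAINING = [
    ((20.0, 0.1), "healthy"),
    ((35.0, 0.3), "healthy"),
    ((30.0, 0.2), "healthy"),
    ((90.0, 4.0), "degraded"),
    ((85.0, 5.5), "degraded"),
    ((95.0, 3.5), "degraded"),
]


def classify(sample, k=3):
    """Label a sample by majority vote among its k nearest training points."""
    nearest = sorted(TRAINING, key=lambda item: dist(item[0], sample))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((88.0, 4.2)))  # prints "degraded"
print(classify((25.0, 0.2)))  # prints "healthy"
```

The model never receives an explicit rule such as "CPU above 80% is bad"; it infers the label from labelled examples, which is the defining trait of supervised learning.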

Neural Networks

Inspired by the human brain, neural networks are a class of machine learning models that excel at tasks like image and speech recognition.
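As a rough illustration, the sketch below trains a single artificial neuron (a perceptron) to reproduce logical AND. It is far simpler than the multi-layer networks used for image or speech recognition, and the function names are our own, but it shows the basic loop of adjusting weights in response to errors.

```python
# Truth table for logical AND: inputs -> expected output
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(data, epochs=20):
    """Perceptron learning rule: shift weights by the error on each example."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


weights, bias = train(DATA)
print([predict(weights, bias, inputs) for inputs, _ in DATA])  # prints [0, 0, 0, 1]
```

A real neural network stacks many such units in layers and uses a smoother training procedure, but each unit still computes a weighted sum of its inputs.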

Natural Language Processing (NLP)

NLP enables computers to understand, interpret, and generate human language. It is pivotal in applications like chatbots, language translation, and sentiment analysis.

Computer Vision

This technology allows machines to interpret and understand visual information, making it valuable for tasks like image recognition and video analysis.

These components form the building blocks of AI in IT, enabling systems to perform tasks ranging from data analysis and predictive maintenance to chatbots and autonomous IT operations.

What are the applications of AI in IT?

Artificial Intelligence has found applications in various domains of IT, transforming the way organizations operate and manage their technology infrastructure.

AI in Data Analytics and Business Intelligence

The data-driven nature of IT makes it an ideal playground for AI. AI algorithms can sift through vast datasets, identifying trends and patterns that would be impractical for humans to find manually. In data analytics, AI models can generate insights that inform decision-making processes and drive business strategies. These insights help in optimizing resource allocation, understanding customer behaviour, and predicting future trends.

AI in business intelligence extends beyond just data analysis. It can provide real-time dashboards, automate reporting, and even make recommendations. For example, AI-powered recommendation engines can suggest products to online shoppers, increasing sales and customer satisfaction.
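One simple way a recommendation engine can work is to suggest items bought by customers with similar baskets. The sketch below assumes a tiny, made-up purchase history (`PURCHASES`) and a hypothetical `recommend` helper; production engines use much richer models.

```python
from collections import Counter

# Hypothetical purchase history: user -> set of items bought
PURCHASES = {
    "ana": {"laptop", "mouse", "dock"},
    "ben": {"laptop", "mouse"},
    "cho": {"laptop", "dock", "monitor"},
    "dev": {"mouse", "keyboard"},
}


def recommend(user, top_n=2):
    """Suggest unowned items, weighted by how similar each other user is."""
    owned = PURCHASES[user]
    scores = Counter()
    for other, items in PURCHASES.items():
        if other == user:
            continue
        overlap = len(owned & items)  # shared items act as a similarity weight
        for item in items - owned:
            scores[item] += overlap
    return [item for item, score in scores.most_common(top_n) if score > 0]


print(recommend("ben", top_n=1))  # prints ['dock']
```

Here "ben" is steered towards the dock because the shoppers whose baskets most resemble his also bought one.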

AI in Cybersecurity

In the realm of IT, security is paramount. AI has become a formidable ally in identifying and mitigating threats. With the ability to analyze vast amounts of network traffic and system logs, AI-driven cybersecurity solutions can detect anomalous behaviour and respond in real-time. This proactive approach to cybersecurity can prevent data breaches, malware attacks, and other security incidents.
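A very simple form of anomaly detection compares a new measurement with the historical average. The following sketch flags values that sit several standard deviations away from the mean; the failed-login counts and the `is_anomalous` name are assumptions for illustration, and real security tools combine many more signals.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it is more than `threshold` standard deviations from the mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical failed-login counts per minute during normal operation
baseline = [4, 6, 5, 7, 5, 6, 4, 5]

print(is_anomalous(baseline, 6))   # prints False
print(is_anomalous(baseline, 40))  # prints True
```

A sudden jump to 40 failed logins in a minute stands out against this baseline and could be used to raise an alert or trigger an automated response.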

Furthermore, AI is instrumental in developing predictive security measures. By analyzing historical data and recognizing patterns, AI can anticipate potential threats and vulnerabilities, allowing IT teams to take preemptive action.

AI in IT Operations and Infrastructure Management

The daily operations of IT infrastructure are intricate and susceptible to errors. AI streamlines these operations by automating routine tasks, such as system updates, patch management, and routine maintenance. For instance, AI-driven systems can monitor the performance of servers, network devices, and applications in real-time. When anomalies are detected, the system can trigger automated responses, such as scaling resources up or down to meet demand.
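The scaling decision itself can be as simple as a proportional rule. The sketch below is a hand-written rule rather than a learned model, with hypothetical parameter names and limits; an AI-driven system might feed it a forecast load instead of the current reading.

```python
import math


def desired_replicas(current, cpu_percent, target_percent=60.0,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to observed vs. target CPU use."""
    wanted = math.ceil(current * cpu_percent / target_percent)
    # Clamp the result so the rule never scales to zero or beyond the budget.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(current=4, cpu_percent=90))  # prints 6
print(desired_replicas(current=4, cpu_percent=30))  # prints 2
```

The upper and lower limits matter in practice: they keep a noisy metric from scaling a service down to nothing or up past what the organization is willing to pay for.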

In the domain of infrastructure management, AI enables predictive maintenance. Through data analysis, AI systems can predict when equipment is likely to fail and trigger maintenance before the failure occurs. This not only reduces downtime but also extends the life of the hardware, saving organizations substantial costs.
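One basic way to predict a failure date is to fit a trend line to recent readings and extrapolate. The sketch below does this for disk usage with ordinary least squares; the readings and the `days_until_full` helper are illustrative assumptions, and real predictive maintenance models typically use many more signals.

```python
def days_until_full(usage_percent, limit=100.0):
    """Fit a least-squares line to daily readings and extrapolate to `limit`.

    Returns None when usage is flat or shrinking.
    """
    n = len(usage_percent)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    days = range(n)
    mean_x = sum(days) / n
    mean_y = sum(usage_percent) / n
    slope_num = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, usage_percent))
    slope_den = sum((x - mean_x) ** 2 for x in days)
    slope = slope_num / slope_den
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (limit - intercept) / slope
    return day_full - (n - 1)  # days remaining after the latest reading


# Hypothetical daily disk usage readings, in percent
print(days_until_full([70, 72, 74, 76, 78]))  # prints 11.0
```

With usage growing two percentage points a day, the disk is projected to fill in about eleven days, which leaves time to schedule maintenance before the failure occurs.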

AI in Customer Support and Service Desk

AI-powered chatbots and virtual assistants have revolutionized customer support. These bots are available 24/7, providing quick responses to customer inquiries. They can handle common issues and questions, leaving human support agents to deal with more complex problems. Chatbots can also collect customer data and preferences, making customer interactions more personalized.

In the service desk domain, AI can be employed to create intelligent ticketing systems. These systems can categorize, prioritize, and route tickets to the most appropriate support personnel, improving response times and resolution rates.
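To show the shape of such a system, here is a deliberately simple, keyword-based sketch. The queue names, keyword lists, and `triage` function are hypothetical; an AI-based service desk would typically replace the keyword matching with a trained text classifier.

```python
import re

# Hypothetical support queues and the keywords that point to them
ROUTES = {
    "network": {"vpn", "wifi", "dns", "firewall"},
    "identity": {"password", "login", "mfa", "account"},
    "hardware": {"laptop", "monitor", "keyboard", "printer"},
}
URGENT_WORDS = {"outage", "down", "urgent", "breach"}


def triage(ticket_text):
    """Return (queue, priority) for a ticket based on keyword overlap."""
    words = set(re.findall(r"[a-z]+", ticket_text.lower()))
    scores = {queue: len(words & keywords) for queue, keywords in ROUTES.items()}
    queue = max(scores, key=scores.get)
    if scores[queue] == 0:
        queue = "general"  # nothing matched, so fall back to a catch-all queue
    priority = "high" if words & URGENT_WORDS else "normal"
    return queue, priority


print(triage("VPN is down for the whole office, urgent"))  # prints ('network', 'high')
print(triage("I forgot my password and cannot login"))     # prints ('identity', 'normal')
```

The three steps from the paragraph above map directly onto the code: scoring categorizes the ticket, the urgency check prioritizes it, and the returned queue name routes it.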

AI in Software Development and Testing

AI is not limited to operational tasks in IT. It has found its way into the software development life cycle. AI-driven tools can automate coding tasks, generate code from natural language specifications, and assist in debugging. This speeds up the development process and can reduce the risk of human error, although generated code still needs human review and testing.

Moreover, AI aids in software testing by automating the testing process. AI-powered testing tools can simulate user interactions, run tests at scale, and identify defects or vulnerabilities faster than manual testing. This accelerates software delivery while ensuring quality.

What are the benefits of AI in IT?

The integration of AI in IT brings a multitude of benefits to organizations.

Improved Efficiency and Productivity

AI-driven automation of repetitive tasks not only reduces the risk of human error but also increases efficiency. This leads to faster task completion, reduced operational costs, and more time for IT professionals to focus on strategic activities.

Cost Reduction and Optimization

AI’s predictive capabilities can optimize resource allocation. By forecasting demand, AI ensures that resources are allocated efficiently, reducing wasted capacity and saving money. Additionally, AI in cybersecurity can prevent costly security breaches.
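As a simple illustration, the sketch below forecasts demand with a moving average and converts the forecast into a server count. The capacity per server, the headroom, and the function names are assumptions chosen for the example, not recommendations.

```python
import math


def forecast_next(demand, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = demand[-window:]
    return sum(recent) / len(recent)


def capacity_needed(demand, per_server=100, headroom=0.2, window=3):
    """Servers required to serve the forecast demand plus a safety margin."""
    expected = forecast_next(demand, window) * (1 + headroom)
    return math.ceil(expected / per_server)


# Hypothetical requests per second observed over the last four periods
history = [400, 450, 500, 530]
print(capacity_needed(history))  # prints 6
```

A moving average is about the simplest forecasting method available; the point is that sizing capacity to a forecast, rather than to a fixed worst case, is what reduces wasted capacity.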

Enhanced Decision-Making

AI systems analyze data from multiple sources and offer insights that aid decision-making. IT professionals can make more informed choices regarding system upgrades, resource allocation, and security measures.

Predictive Maintenance and Reliability

AI’s ability to predict equipment failures and maintenance needs ensures that systems remain reliable. This minimizes downtime, keeps operations running smoothly, and extends the lifespan of equipment.

Customer Experience Improvement

Incorporating AI in customer support and service desk operations ensures rapid and consistent responses to customer inquiries. This enhances the customer experience and fosters loyalty.

What are the challenges and limitations of using AI in IT?

While the benefits of AI in IT are substantial, it’s essential to acknowledge the challenges and limitations.

Data Privacy and Security Concerns

The extensive use of AI requires large volumes of data, which raises concerns about data privacy and security. Organizations must ensure that sensitive data is adequately protected.

Skill and Talent Gap

Implementing AI in IT requires skilled professionals who understand both the technology and the specific needs of the organization. There’s a shortage of AI talent, making recruitment and retention challenging.

Ethical and Bias Issues

AI algorithms can inherit biases from training data, leading to discriminatory outcomes. Ethical considerations are paramount, and organizations must actively work to detect and reduce biases in their AI systems.
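One common first check is to compare how often each group receives a favourable outcome. The sketch below computes that gap (often called the demographic parity difference) for a made-up list of automated approval decisions; the data and function names are hypothetical, and a small gap alone does not prove a system is fair.

```python
def selection_rates(decisions):
    """Share of positive decisions per group, from (group, approved) pairs."""
    totals, positives = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical outcomes of an automated approval model
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
print(selection_rates(decisions))  # prints {'group_a': 0.75, 'group_b': 0.25}
print(parity_gap(decisions))       # prints 0.5
```

A gap this large would be a signal to look more closely at the training data and the features the model relies on.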

Integration Challenges

Integrating AI into existing IT infrastructure can be complex. Compatibility issues, data silos, and the need for custom solutions are common hurdles.

Regulatory and Compliance Hurdles

Compliance with data protection regulations such as GDPR, and with emerging AI-specific rules, is critical. Organizations must navigate legal and regulatory frameworks to ensure they are using AI responsibly.

What are some real-world examples of using AI in IT?

Let’s explore some real-world examples of organizations that have successfully implemented AI in IT.

Case Study 1: Netflix

Netflix utilizes AI to recommend personalized content to its users. By analyzing user preferences and viewing history, their recommendation engine keeps users engaged and subscribed.

Case Study 2: IBM Watson

IBM’s Watson is a well-known AI system used in various industries. In healthcare, it aids in diagnosis and treatment planning, while in finance, it helps with risk assessment and fraud detection.

Case Study 3: Google

Google’s search algorithm utilizes AI to deliver more relevant search results. The company also employs AI in its language translation services, and its self-driving car project (now Waymo, a separate Alphabet company) is built on AI.

These examples illustrate the diverse applications of AI in IT and how it can transform business processes.

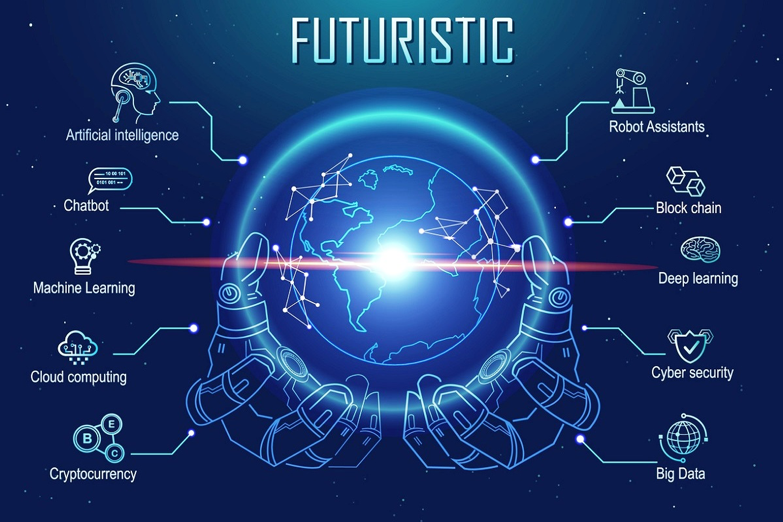

What are the future trends in AI for IT?

As AI continues to advance, several exciting trends are emerging in the IT sector.

Quantum Computing and AI

Quantum computing holds the potential to change AI in IT. For certain classes of problems, quantum algorithms promise large speedups over classical computers, in some cases exponential ones. If the hardware matures, this technology could enhance AI capabilities, particularly in areas like cryptography, optimization problems, and large-scale data analysis.

AI Automation and Autonomy

AI systems are becoming increasingly autonomous, capable of making decisions and taking actions without human intervention. This autonomy extends to IT operations, where AI-driven systems can respond to incidents, allocate resources, and make real-time adjustments. Self-healing systems are a growing trend in IT, enabling infrastructure to maintain peak performance and reliability.

AI Ethics and Regulation

With the growing use of AI, there’s an increasing focus on ethical considerations and regulations. AI ethics involves ensuring that AI systems are transparent, accountable, and free from bias. Governments and industry bodies are working on regulations to govern AI use, particularly in areas like data privacy and AI in critical infrastructure.

The role of AI in the Internet of Things (IoT)

The IoT is a rapidly expanding field with vast data generated by connected devices. AI plays a crucial role in making sense of this data. It can analyze and interpret IoT data, enabling predictive maintenance, improving operational efficiency, and creating smarter, more connected environments.

AI-ML Convergence

AI and machine learning tooling are converging into user-friendly platforms that make AI accessible to a broader audience. This convergence empowers IT professionals to develop and deploy AI models without deep expertise in data science.

How to Get Started with AI in IT?

If you’re intrigued by the potential of AI in IT and want to explore its benefits, here are some steps to get started:

Building AI Expertise within IT Teams

Invest in training and upskilling your IT teams. Encourage them to learn about AI and machine learning through courses, workshops, and certifications. Consider creating a dedicated AI team within your organization.

Tools and Platforms for AI Implementation

Explore AI tools and platforms that fit your IT requirements. Popular open-source libraries like TensorFlow, PyTorch, and scikit-learn can help you develop AI models. Cloud service providers also offer AI services that are accessible and scalable.

Identifying the Right Use Cases for AI in Your Organization

Not all AI applications are suitable for every organization. Identify use cases that align with your business goals and challenges. Start with pilot projects to test the waters before scaling AI initiatives.

Collaboration and Partnerships

Consider collaborating with AI experts and solution providers. Partnering with organizations that specialize in AI can accelerate your AI journey and provide valuable insights.

Wrapping Up!

In this comprehensive guide, we’ve explored the world of AI in IT, from its basic principles to its vast applications, benefits, and challenges. AI is transforming the IT landscape, offering efficiency, cost reduction, enhanced decision-making, reliability, and improved customer experiences. It’s not without its challenges, including data privacy concerns, skill shortages, and ethical considerations.

As we look to the future, quantum computing, increased autonomy, ethics, IoT integration, and AI-ML convergence promise to shape the IT industry. To embrace this transformation, organizations should start by building AI expertise, selecting the right tools and platforms, identifying use cases, and seeking collaboration and partnerships.

The importance of AI in IT will only continue to grow, making it imperative for organizations to adapt and leverage AI technologies to remain competitive and meet the evolving needs of the digital era.

As AI continues to advance, staying informed and engaged in this dynamic field will be a valuable asset for your organization’s success.

Happy Learning!